Kubernetes Networking 101

- A Getting-Started Guide for Cloud-native Service Communication

- A Refresh: Kubernetes Basics

- Kubernetes Networking: Types, Requirements, and Implementations

- What is a Service Mesh?

- Cross-cloud Service Meshes

- Service Mesh Control Plane and Data Plane

- Istio

- Using Istio in Practice with Kubernetes Clusters

- Key Takeaways about Kubernetes Networking

Summary

In this blog post, we provide a starting point for understanding the networking model behind Kubernetes and how to make Kubernetes networking simpler and more efficient.

A Getting-Started Guide for Cloud-native Service Communication

As the complexity of microservice applications continues to grow, it’s becoming extremely difficult to track and manage interactions between services. Understanding the network footprint of applications and services is now essential for delivering fast and reliable services in cloud-native environments. Networking is not evaporating into the cloud but instead has become a critical component that underpins every part of modern application architecture.

If you are at the beginning of the journey to modernize your application and infrastructure architecture with Kubernetes networking, it’s important to understand how service-to-service communication works in this new world. This blog post is a good starting point to understand the networking model behind Kubernetes and how to make things simpler and more efficient.

(There are two main models for container cluster networking: the Docker model and the Kubernetes model. In this post, we focus on Kubernetes.)

A Refresh: Kubernetes Basics

Let’s first review a few basic Kubernetes concepts:

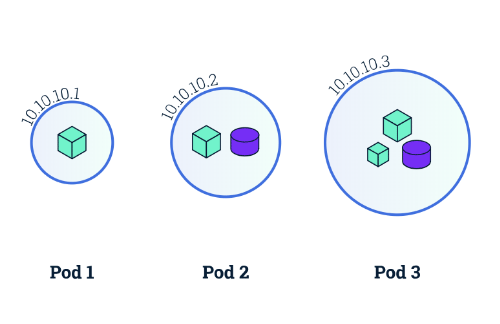

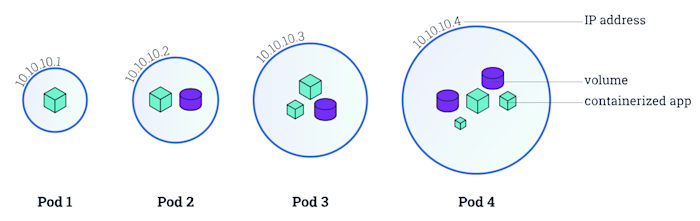

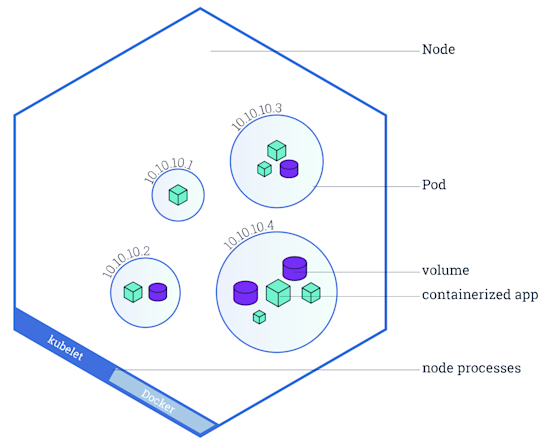

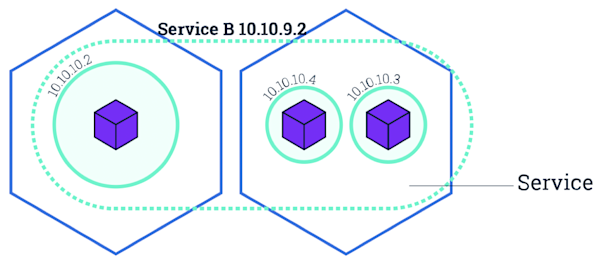

Pod: “A pod is the basic building block of Kubernetes,” which encapsulates containerized apps, storage, a unique network IP address, and instructions on how to run those containers. Pods can either run a single containerized app or run multiple containerized apps that need to work together. (“Containerized app” and “container” will be used interchangeably in this post.)

Node: A node is a worker machine in Kubernetes. It may be a VM or a physical machine. Each node contains the services necessary to run pods and is managed by the Kubernetes control plane (historically called the master components).

Service/Micro-service: A Kubernetes Service is an abstraction which defines a logical set of pods and a policy by which to access them. Services enable loose coupling between dependent pods.
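To make the Service definition concrete, here is a minimal Python model (not the Kubernetes API) of how a Service's label selector picks out its logical set of pods. The pod names, labels, and IPs are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)


@dataclass
class Service:
    """Toy Kubernetes Service: a stable name plus a label selector."""
    name: str
    selector: dict

    def matches(self, pod: Pod) -> bool:
        # A pod is selected if it carries every key/value pair in the selector.
        return all(pod.labels.get(key) == value for key, value in self.selector.items())

    def endpoints(self, pods: list) -> list:
        # IPs of the selected pods; traffic sent to the Service is spread across these.
        return [pod.ip for pod in pods if self.matches(pod)]


pods = [
    Pod("cart-1", "10.244.1.5", {"app": "cart"}),
    Pod("cart-2", "10.244.2.7", {"app": "cart"}),
    Pod("user-1", "10.244.1.9", {"app": "user"}),
]
cart = Service("cart", {"app": "cart"})
print(cart.endpoints(pods))  # ['10.244.1.5', '10.244.2.7']
```

Because clients address the Service rather than individual pods, pods can come and go (and change IPs) without breaking their dependents, which is the loose coupling described above.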

Kubernetes Networking: Types, Requirements, and Implementations

There are several layers of networking that operate in a Kubernetes cluster. That means there are distinct networking problems to solve for different types of communications:

- Container-to-container

- Pod-to-pod

- Pod-to-service

- External-to-service

Sometimes, container-to-container and pod-to-pod communication are grouped as “pod networking,” and pod-to-service and external-to-service communication as “service networking.”
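One way to see the four communication types is to classify a flow by its source and destination addresses. The sketch below is a toy model under stated assumptions: the pod range, Service range, and load-balancer address are hypothetical (real clusters configure their own), and it ignores NodePorts and host-networked pods.

```python
import ipaddress

# Hypothetical address plan; real clusters configure their own ranges.
POD_CIDR = ipaddress.ip_network("10.244.0.0/16")
SERVICE_CIDR = ipaddress.ip_network("10.96.0.0/12")
LOAD_BALANCER_IPS = {ipaddress.ip_address("203.0.113.10")}  # an externally exposed Service


def classify(src_ip: str, dst_ip: str) -> str:
    """Label a flow with one of the four Kubernetes communication types (toy model)."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    if dst.is_loopback or src == dst:
        # Containers in one pod share a network namespace (one IP),
        # so they reach each other over localhost or the shared pod IP.
        return "container-to-container"
    if dst in SERVICE_CIDR or dst in LOAD_BALANCER_IPS:
        return "pod-to-service" if src in POD_CIDR else "external-to-service"
    if src in POD_CIDR and dst in POD_CIDR:
        return "pod-to-pod"
    return "other"  # e.g., pod egress to the internet


print(classify("127.0.0.1", "127.0.0.1"))        # container-to-container
print(classify("10.244.1.5", "10.244.2.7"))      # pod-to-pod
print(classify("10.244.1.5", "10.96.0.10"))      # pod-to-service
print(classify("198.51.100.7", "203.0.113.10"))  # external-to-service
```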

In the Kubernetes networking model, in order to reduce complexity and make app porting seamless, a few rules are enforced as fundamental requirements:

- Containers can communicate with all other containers without NAT.

- Nodes can communicate with all containers without NAT, and vice-versa.

- The IP that a container sees itself as is the same IP that others see it as.

This set of requirements significantly reduces the complexity of network communication for developers and lowers the friction of porting apps from monoliths (VMs) to containers. However, a flat IP network spanning the entire Kubernetes cluster creates new challenges for network engineering and operations teams. This is one reason why so many networking solutions and implementations are available and documented on kubernetes.io today.
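A common way to build this flat, NAT-free pod network is to carve a cluster-wide pod range into one subnet per node, similar in spirit to the per-host subnet leases that Flannel hands out. The sketch below assumes a hypothetical 10.244.0.0/16 cluster range split into /24s and a hypothetical convention that .1 on each node is the gateway, then checks the property the requirements rely on: every pod IP is unique and inside the cluster range, so pods can address each other directly.

```python
import ipaddress

# Hypothetical cluster-wide pod range, split into one /24 per node.
CLUSTER_CIDR = ipaddress.ip_network("10.244.0.0/16")
NODE_PREFIX = 24


def allocate_node_subnets(nodes):
    """Assign each node its own, non-overlapping slice of the cluster range."""
    subnets = list(CLUSTER_CIDR.subnets(new_prefix=NODE_PREFIX))
    if len(nodes) > len(subnets):
        raise ValueError("cluster CIDR is too small for this many nodes")
    return dict(zip(nodes, subnets))


def pod_ip(node_subnet, index):
    """The index-th pod address on a node; .0 is the network, .1 the node's gateway."""
    address = node_subnet.network_address + 2 + index
    if address >= node_subnet.broadcast_address:
        raise ValueError("node subnet exhausted")
    return address


def is_flat(pod_ips):
    """True if every pod IP is unique and inside the cluster range."""
    return len(set(pod_ips)) == len(pod_ips) and all(ip in CLUSTER_CIDR for ip in pod_ips)


subnets = allocate_node_subnets(["node-a", "node-b"])
ips = [pod_ip(subnets[node], i) for node in subnets for i in range(2)]
print([str(ip) for ip in ips])  # ['10.244.0.2', '10.244.0.3', '10.244.1.2', '10.244.1.3']
print(is_flat(ips))             # True
```

Because no two nodes share a subnet, a packet's destination IP alone tells the network which node hosts the pod, which is what lets plugins route (or encapsulate) pod traffic without translating addresses.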

While meeting the three requirements above, each of the following implementations tries to solve different kinds of networking problems:

- Application Centric Infrastructure (Cisco) - provides container networking integration for ACI

- AOS (Apstra) - enables Kubernetes to quickly change the network policy based on application requirements

- Big Cloud Fabric (Big Switch Networks) - is designed to run Kubernetes in private cloud/on-premises environments

- CNI-Genie (Huawei) - CNI stands for “Container Network Interface”; this implementation lets Kubernetes use different implementations of the Kubernetes network model simultaneously at runtime

- cni-ipvlan-vpc-k8s - enables Kubernetes deployment at scale within AWS

- Cilium (Open Source) - provides secure network connectivity between application containers

- Contiv (Open Source) - provides configurable networking (e.g., native L3 using BGP, overlay using VXLAN, classic L2, or Cisco-SDN/ACI) for various use cases

- Contrail / Tungsten Fabric (Juniper) - provides different isolation modes for virtual machines, containers/pods, and bare metal workloads

- DANM (Nokia) - is built for telco workloads running in a Kubernetes cluster

- Flannel - a very simple overlay network that satisfies the Kubernetes requirements

- Google Compute Engine (GCE) - all pods can reach each other and can egress traffic to the internet

- Jaguar (OpenDaylight) - provides overlay network with one IP address per pod

- Knitter (ZTE) - provides tenant management and network management

- Kube-router (CloudNativeLabs) - provides a Linux LVS/IPVS-based service proxy and a Linux kernel forwarding-based pod-to-pod networking solution with no overlays

- L2 networks and Linux bridging

- Multus (a multi-network plugin) - lets pods attach to multiple networks by acting as a meta-plugin that calls other CNI plugins

- NSX-T (VMware) - provides network virtualization for multi-cloud and multi-hypervisor environments

- Virtualized Cloud Services (VCS) (Nuage Networks)

- Open vSwitch - enables network automation through programmatic extension, while still supporting standard management interfaces and protocols

- Open Virtual Networking (OVN) (Open vSwitch community)

- Project Calico (Open Source) - a container networking provider and network policy engine

- Romana (Open Source) - a network and security automation solution that lets you deploy Kubernetes without an overlay network

- Weave Net (Weaveworks) - a resilient and simple-to-use network for Kubernetes and its hosted applications

Among all these implementations, Flannel, Calico, and Weave Net are probably the most popular network plugins for the Container Network Interface (CNI). CNI, as its name implies, can be seen as the simplest possible interface between container runtimes and network implementations, with the goal of creating a generic plugin-based networking solution for containers. As a CNCF project, it has become a de facto industry-standard interface.
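Under the CNI specification, the container runtime executes a plugin binary, passes parameters such as `CNI_COMMAND`, `CNI_NETNS`, and `CNI_IFNAME` as environment variables, writes the network configuration JSON to the plugin's stdin, and reads a JSON result from its stdout. The toy plugin below sketches that contract in Python. The fixed IP address is a hypothetical stand-in for real IPAM, the plugin does not actually create any interface, and it does not implement the spec's JSON error format.

```python
import json
import os
import sys

SUPPORTED_VERSIONS = ["0.4.0", "1.0.0"]


def handle(command, ifname, netns, config):
    """Return the result for one CNI invocation of this toy plugin (None = no output)."""
    if command == "VERSION":
        return {"cniVersion": config.get("cniVersion", "1.0.0"),
                "supportedVersions": SUPPORTED_VERSIONS}
    if command == "ADD":
        # Hypothetical fixed address: a real plugin would delegate to an IPAM plugin
        # and create the interface (e.g., a veth pair) inside the container's netns.
        return {
            "cniVersion": config["cniVersion"],
            "interfaces": [{"name": ifname, "sandbox": netns}],
            "ips": [{"address": "10.244.1.2/24", "gateway": "10.244.1.1", "interface": 0}],
        }
    if command == "DEL":
        return None  # nothing was actually created, so nothing to tear down
    # CHECK and newer commands are not handled; a real plugin would print a
    # JSON error object and exit non-zero instead of raising.
    raise ValueError(f"unsupported CNI_COMMAND: {command!r}")


def main():
    config = json.load(sys.stdin)
    result = handle(os.environ["CNI_COMMAND"], os.environ.get("CNI_IFNAME", "eth0"),
                    os.environ.get("CNI_NETNS", ""), config)
    if result is not None:
        json.dump(result, sys.stdout)


if __name__ == "__main__":
    main()
```

Saved as a hypothetical `toy_cni.py`, running `echo '{"cniVersion": "1.0.0", "name": "demo", "type": "toy"}' | CNI_COMMAND=ADD CNI_IFNAME=eth0 CNI_NETNS=/var/run/netns/demo python3 toy_cni.py` would print the ADD result that a runtime would consume.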

Some quick and additional information about Flannel, Calico, and Weave Net:

- Flannel: Flannel can run using several encapsulation backends, with VXLAN being the recommended one. Flannel is simple to deploy, and it also offers a non-encapsulating host-gateway (host-gw) backend that routes pod traffic natively between hosts.

- Calico: Calico is not really an overlay network but can be seen as a pure IP networking fabric (leveraging BGP) for Kubernetes clusters across the cloud. It is a network solution for Kubernetes and is described as simple, scalable, and secure.

- Weave Net: Weave Net is a cloud-native networking toolkit that creates a virtual network to connect containers across multiple hosts and enables automatic discovery. Weave Net also supports VXLAN.
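Flannel's recommended VXLAN backend (and Weave Net's VXLAN support) wraps each pod's Ethernet frame in a UDP packet that starts with the 8-byte VXLAN header defined in RFC 7348. The sketch below builds and parses just that header; the outer Ethernet, IP, and UDP headers are left to the kernel. VNI 1 is Flannel's documented Linux default, but treat it as an assumption and check your own configuration.

```python
import struct

VXLAN_I_FLAG = 0x08      # "I" flag from RFC 7348: the VNI field is valid
FLANNEL_DEFAULT_VNI = 1  # assumption: Flannel's Linux default; check your config


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte header: flags, 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_I_FLAG << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int = FLANNEL_DEFAULT_VNI) -> bytes:
    """UDP payload of an overlay packet: VXLAN header followed by the pod's frame."""
    return vxlan_header(vni) + inner_frame


def parse_vni(payload: bytes) -> int:
    """Recover the VNI from the start of a VXLAN UDP payload."""
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & VXLAN_I_FLAG:
        raise ValueError("VNI-valid flag not set")
    return vni_word >> 8


print(vxlan_header(1).hex())  # 0800000000000100
```

The 24-bit VNI is what lets one physical network carry up to about 16 million isolated overlay segments, compared with 4,094 usable VLAN IDs.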

What is a Service Mesh?

Application developers usually assume that the network below Layer 4 “just works.” They want to handle service communication at Layers 4 through 7, where they often implement functions like load balancing, service discovery, encryption, metrics, application-level security, and more. If the operations team does not deliver these services within the infrastructure, developers will often roll up their sleeves and fill the gap by changing their application code. The downsides are obvious:

- Repeating the same work across different applications

- Disorganized code base that blends both application and infrastructure functions

- Inconsistent policies between services for routing, security, resiliency, monitoring, etc.

- Potential troubleshooting nightmares
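For a sense of what “changing their code” means in practice, here is the kind of retry-with-backoff helper that teams end up writing (and rewriting in every language) when the infrastructure does not provide resilience. It is a hypothetical Python sketch; a service mesh moves this kind of logic, along with timeouts and circuit breaking, into the proxy instead.

```python
import time


def call_with_retries(fn, attempts=3, base_delay=0.1,
                      retry_on=(ConnectionError, TimeoutError)):
    """Call fn(), retrying transient errors with exponential backoff (hypothetical helper)."""
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            time.sleep(base_delay * 2 ** attempt)  # base_delay, 2x, 4x, ...


calls = {"n": 0}


def flaky_payment_call():
    # Stand-in for an RPC that fails twice before succeeding.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("payment service unavailable")
    return "paid"


print(call_with_retries(flaky_payment_call, base_delay=0.01))  # paid
```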

…And that’s where service meshes come in.

One of the main benefits of a service mesh is that it allows operations teams to introduce monitoring, security, failover, etc. without developers needing to change their code.

So far, the most-quoted definition of a service mesh is probably the one from William Morgan, CEO of Buoyant:

“A service mesh is a dedicated infrastructure layer for handling service-to-service communication. It’s responsible for the reliable delivery of requests through the complex topology of services that comprise a modern, cloud-native application. In practice, the service mesh is typically implemented as an array of lightweight network proxies that are deployed alongside application code, without the application needing to be aware.”

With service meshes, developers can bring their focus back to their primary task of creating business value with their applications, and have the operations team take responsibility for service management on a dedicated infrastructure layer (sometimes called “Layer 5”). This way, it’s possible to maintain consistent and secure communication among services.

Cross-cloud Service Meshes

A cross-cluster service mesh is a service mesh that connects workloads running on different Kubernetes clusters, and potentially standalone VMs. When those clusters span multiple cloud providers, we have a cross-cloud service mesh. From a technical standpoint, however, there is little difference between a cross-cluster and a cross-cloud service mesh.

The cross-cloud service mesh brings all the benefits of a general service mesh but with the additional benefit of offering a single view into multiple clouds. (Learn more about this topic in our post on Kubernetes and Cross-cloud Service Meshes.)

Service Mesh Control Plane and Data Plane

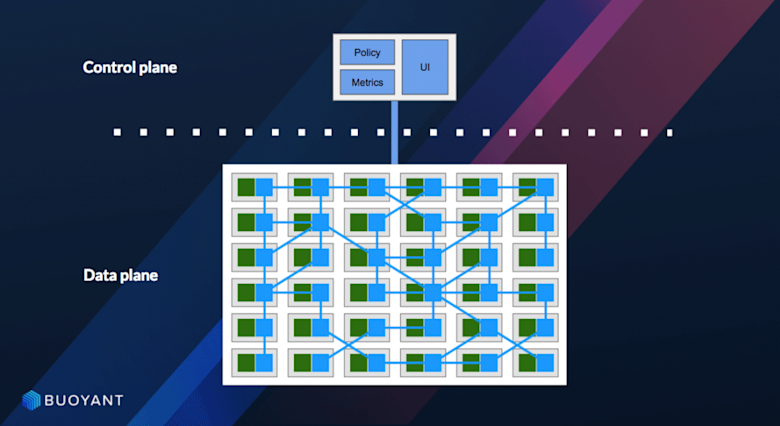

Service meshes have two planes that will be familiar to most network engineers: a control plane and a data plane (a.k.a. forwarding plane). Whereas traditional networking planes focus on routing and connectivity, service mesh planes focus on application-level communication such as HTTP and gRPC.

The data plane basically touches every data packet in the system to make sure things like service discovery, health checking, routing, load balancing, and authentication/authorization work.

The control plane acts as a traffic controller of the system that provides a centralized API that decides traffic policies, where services are running, etc. It basically takes a set of isolated stateless sidecar proxies and turns them into a distributed system.
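The division of labor can be sketched as a toy, in-process Python model: a control plane holds the desired state (which endpoints back each service and whether they are healthy) and pushes it to every sidecar, while each sidecar only does per-request work, here round-robin load balancing. For simplicity, health is reported to the control plane rather than checked by the proxy itself. In Istio, istiod plays the control-plane role and pushes configuration to Envoy sidecars over Envoy's xDS APIs; the class and method names below are hypothetical and do not reflect either API.

```python
import itertools


class Sidecar:
    """Data plane: picks an endpoint for each request using the config it was given."""

    def __init__(self):
        self.routes = {}  # service name -> round-robin iterator over endpoints

    def apply_config(self, config):
        # Replace the whole routing table with the latest push from the control plane.
        self.routes = {svc: itertools.cycle(eps) for svc, eps in config.items() if eps}

    def pick(self, service):
        return next(self.routes[service])  # KeyError if no healthy endpoint is known


class ControlPlane:
    """Control plane: holds the desired state and pushes it to every sidecar."""

    def __init__(self):
        self.sidecars = []
        self.health = {}  # service -> {endpoint: is_healthy}

    def register(self, sidecar):
        self.sidecars.append(sidecar)
        sidecar.apply_config(self.config())

    def set_endpoint(self, service, endpoint, healthy=True):
        self.health.setdefault(service, {})[endpoint] = healthy
        for sidecar in self.sidecars:
            sidecar.apply_config(self.config())

    def config(self):
        # Only healthy endpoints are sent to the data plane.
        return {svc: sorted(ep for ep, ok in eps.items() if ok)
                for svc, eps in self.health.items()}


cp, proxy = ControlPlane(), Sidecar()
cp.register(proxy)
cp.set_endpoint("cart", "10.244.1.5:80")
cp.set_endpoint("cart", "10.244.2.7:80")
print([proxy.pick("cart") for _ in range(3)])  # ['10.244.1.5:80', '10.244.2.7:80', '10.244.1.5:80']
cp.set_endpoint("cart", "10.244.1.5:80", healthy=False)
print(proxy.pick("cart"))  # 10.244.2.7:80
```

Each sidecar on its own is stateless and isolated; it is the control plane's pushes that keep all of them consistent, which is what turns them into a distributed system.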

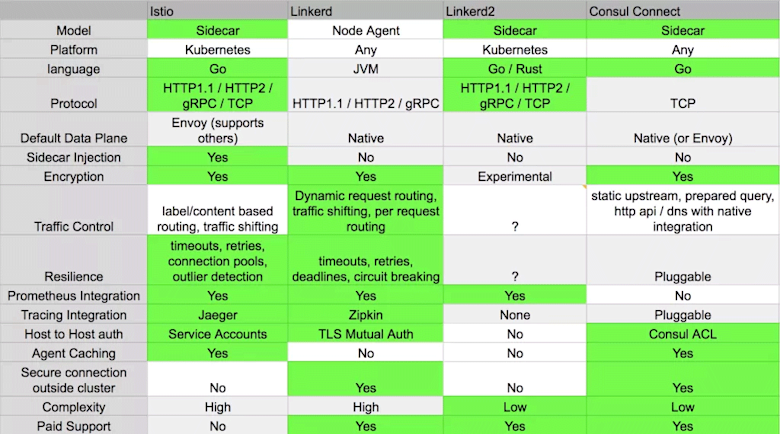

There are many service mesh solutions out there; the most used are probably Istio, Linkerd, Linkerd2 (formerly Conduit), and Consul Connect. All of them support Kubernetes, though not all are limited to it. The comparison table from the Kubedex community is worth a look if you want to get nerdier on this topic.

Istio

As it’s probably the most popular service mesh architecture today, let’s take a closer look into Istio.

Istio is an open platform that lets you connect, secure, control, and observe services in large hybrid and multi-cloud deployments. Istio has strong integration with Kubernetes, plus it has backing from Google, Lyft, and IBM. It also has good traffic management and security features. All of these have helped Istio to gain strong momentum in the cloud-native community.

The design goal behind the Istio architecture is to make the system “capable of dealing with services at scale and with high performance,” according to the Istio website.

Using Istio in Practice with Kubernetes Clusters

Now let’s look at simple examples of a Kubernetes cluster with and without Istio to see the benefits of installing Istio without any changes to the application code running in the cluster.

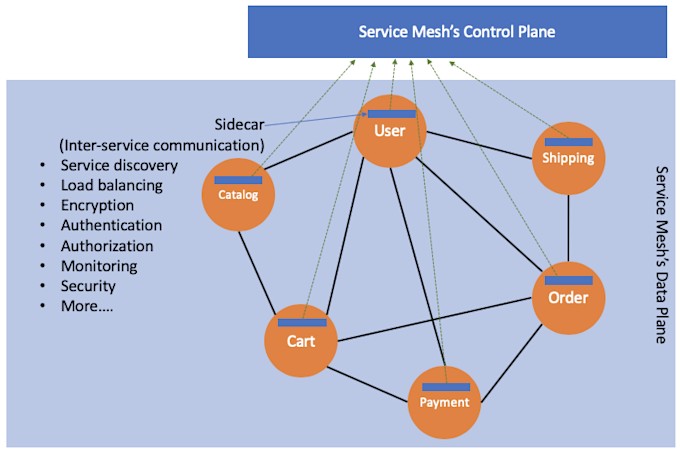

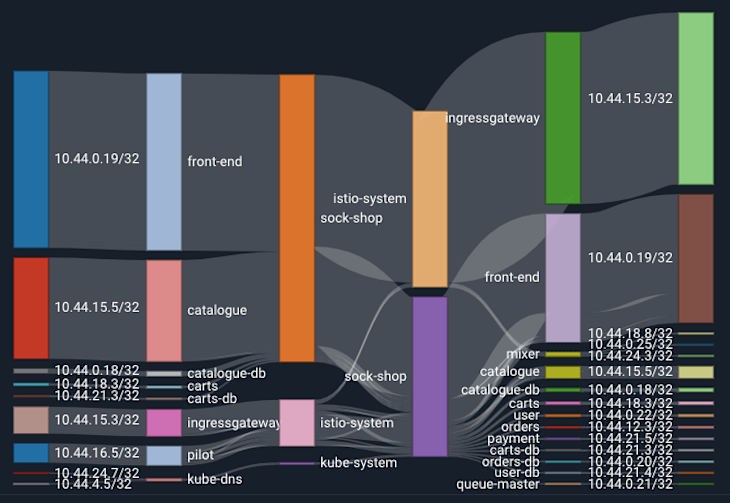

Take the Sock Shop, for example: it’s a demonstration e-commerce site that sells fancy socks online. There are a few microservices within this application: “catalog,” “cart,” “order,” “payment,” “shipping,” “user,” and so on. Because each microservice needs to talk to others via remote procedure calls (RPCs), the distributed nature of microservices means the application forms a “mesh of service communication” over the network.

Without a dedicated layer to handle service-to-service communication, developers need to add a lot of extra code in each service to make sure the communication is reliable and secure.

With Istio installed, each microservice is deployed as a container alongside a sidecar proxy container (Envoy). The sidecar “intercepts all network communication between microservices,” and you then configure and manage Istio using its control plane functionality. This requires no changes to the application itself, even if its components are written in different languages. Istio is therefore well-positioned to generate detailed metrics, logs, and traces about service-to-service communication, enforce policies, and provide mutual authentication, as well as thorough visibility throughout the mesh.
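As a rough illustration of where those metrics come from, the sketch below wraps an outbound call and records a request count and duration keyed by source workload, destination workload, and response code, loosely modeled on Istio's standard `istio_requests_total` metric and its labels. It is a hypothetical in-process Python sketch; a real sidecar does this out of process, without touching application code.

```python
import time
from collections import Counter, defaultdict


class MetricsSidecar:
    """Records every outbound call, keyed like istio_requests_total's labels."""

    def __init__(self, source_workload):
        self.source_workload = source_workload
        self.requests_total = Counter()        # (source, destination, code) -> count
        self.durations_ms = defaultdict(list)  # same key -> observed latencies

    def call(self, destination_workload, send_request):
        start = time.perf_counter()
        response_code = send_request()  # the application's request; returns a status code
        elapsed_ms = (time.perf_counter() - start) * 1000
        key = (self.source_workload, destination_workload, response_code)
        self.requests_total[key] += 1
        self.durations_ms[key].append(elapsed_ms)
        return response_code


sidecar = MetricsSidecar("order")
for code in (200, 200, 503):
    sidecar.call("payment", lambda code=code: code)
print(dict(sidecar.requests_total))
# {('order', 'payment', 200): 2, ('order', 'payment', 503): 1}
```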

Plus, it’s straightforward to set up Istio in just a few steps without extra configuration (see the Quick Start Guide). This ease of deployment has also been one of Istio’s differentiators compared to other service mesh architectures.

Key Takeaways about Kubernetes Networking

Networking that underlies service communication has never been so critical. Because modern applications are so agile and dynamic, networks are a key part of ensuring application availability.

Service meshes and the Kubernetes Networking Model are a sweet combination that can make life easier for developers, and can make applications more portable across different types of infrastructure. Meanwhile, ops teams get a great way to make service-to-service communication easier and more reliable.

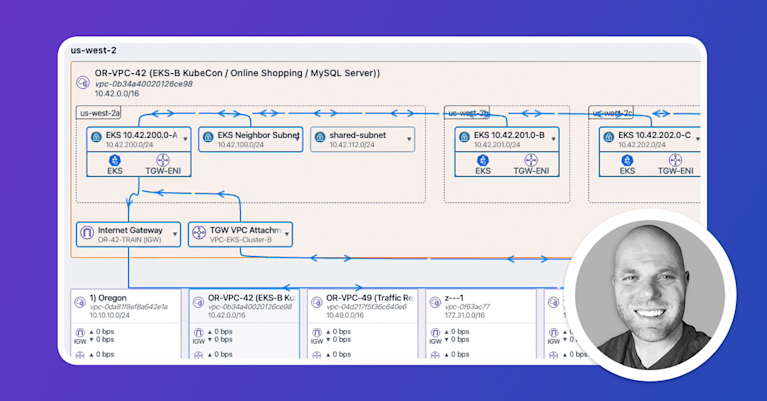

At Kentik, we embrace cutting-edge technology such as Kubernetes and service meshes even as they continue to evolve. Today, Istio metrics are one of many data sources ingested by the Kentik platform to enrich network flow data with service mesh context to provide complete observability for cloud-native environments.

Explore more about Kentik’s “Container Networking” use cases, Kentik Kube for Kubernetes Networking, or reach out to us to tell us about your infrastructure transformation journey into cloud-native architecture.