Posts by Ian Pye

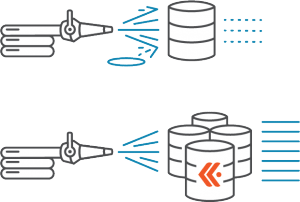

Kentik Detect is powered by Kentik Data Engine (KDE), a massively scalable distributed HA database. One of the challenges of optimizing a multitenant datastore like KDE is ensuring fairness, meaning that queries by one customer don’t degrade performance for other customers. In this post we look at the algorithms used in KDE to keep everyone happy and allocate a fair share of resources to every customer’s queries.

Kentik Detect handles tens of billions of network flow records and many millions of sub-queries every day using a horizontally scaled distributed system with a custom microservice architecture. Instrumentation and metrics play a key role in performance optimization.

For many of the organizations we’ve all worked with or known, SNMP gets dumped into RRDTool, and NetFlow is captured into MySQL. This arrangement is simple, well-documented, and works for initial requirements. But simply put, it’s not cost-effective to store flow data at any scale in a traditional relational database.

Relational databases like PostgreSQL have long been dominant for data storage and access, but sometimes you need access from your application to data that’s either in a different database format, in a non-relational database, or not in a database at all. As shown in this “how-to” post, you can do that with PostgreSQL’s Foreign Data Wrapper feature.