Closing the Network Performance Monitoring Gap and Achieving Full Network Visibility

Summary

As Gartner Research Director Sanjit Ganguli pointed out last May, network performance monitoring appliances that were designed for the era of WAN-connected data centers leave significant blind spots when monitoring modern applications that are distributed across the cloud. In this post Jim Frey, VP Strategic Alliances, explores Ganguli’s analysis and explains how Kentik NPM closes the gaps.

Delivering NPM for Cloud and Digital Operations

On May 27 of this year, Gartner Research Director Sanjit Ganguli released a research note titled “Network Performance Monitoring Tools Leave Gaps in Cloud Monitoring.” It’s a fairly biting critique of the state of affairs in NPM. And I couldn’t agree more.

In the note, Ganguli makes a number of key points in explaining the gap in cloud monitoring. The first is that traditional NPM solutions are of a bygone era. To quote:

“Today’s typical NPMD vendors have their solutions geared toward traditional data center and branch office architecture, with the centralized hosting of applications.”

Until just a few years ago, enterprise networks consisted predominantly of one or more private data centers connected to a series of campuses and branch offices by a private WAN based on MPLS VPN technology from a major telecom carrier. In those settings, you might drop NPM appliances into major data centers, perhaps directly connected to router or switch SPAN ports, or via a tap or packet broker from the likes of Gigamon or Ixia. You’d put a few others (I emphasize “few” because these appliances were and are not cheap) at other major choke points in and out of the network. Voilà, you’ve covered (most of) your network. The devices would do packet capture, derive and crank out performance alerts and summary reports, and retain raw packets for a small window (usually just minutes), enabling closer examination if you could get back to them in time. Or you could spend the big $$ and get the stream-to-disk NPM appliances that might store packets a little longer (think hours instead of minutes).

The pervasive cloud

Today, cloud is a pervasive reality. Just to be clear, what do I mean by cloud? For one, cloud refers to the move to distributed application architectures, where components are no longer all resident on the same server or data center, but instead are spread across networks, commonly including the Internet, and are accessed via API calls. Monolithic applications running in an environment like the one described earlier are not quite gone yet, but they represent the architecture of the past.

Cloud also means that users aren’t just internal users. Digital business initiatives represent more and more of the revenues and profits for most commercial organizations, so users are now end-customers, audiences, clients, partners, and consumers, spread out across the Internet, and perhaps across the globe. The effect on network traffic is profound. As Ganguli says in his research note:

“Migration to the cloud, in its various forms, creates a fundamental shift in network traffic that traditional network performance monitoring tools fail to cover.”

Why can’t traditional NPM tools cover the new reality? For one, if you’re using an appliance, even a virtual one, you’re assuming that you can connect to and record packets from a network interface of some sort. That assumption worked in a traditional scenario when monolithic applications meant that there were relatively few points in the network where the LAN met the WAN and important communication was happening. But cloud realities break that assumption. There are way more connectivity points that matter. API calls may be happening between all sorts of components within a cloud that don’t have a distinct “network interface” that an appliance can attach to. Ganguli observes that:

“Packet analysis through physical or virtual appliances do not have a place to instrument in many public cloud environments.”

The fact is that physical appliances are downright irrelevant in the modern environments of web enterprises, SaaS companies, cloud service providers, and the enterprise application development teams that act like them. And even virtual appliances become impractical to employ effectively. The sheer number of connectivity points that matter means that you’d need to distribute physical and virtual appliances far more broadly than ever before. That sounds like a lip-smacking feast for NPM vendors. But because the cost is completely prohibitive, it has never been economically feasible for network managers to deploy very many NPM appliances.

The result is functional blindness or, to put it more mildly, it’s really hard to figure out what’s happening. In Ganguli’s words:

“Compute latency and communications latency become much harder to distinguish, forcing network teams to spend more time isolating issues between the cloud and network infrastructure.”

In the new cloud reality, when you have an application performance problem that is impacting user experience, you can’t easily tell whether it’s the network or not. Network teams can no longer run around with manual tools like tcpdump and Wireshark, trying to figure things out. Further, this creates what is actually an existential problem, because digital business, revenues, profits, audiences, brand, and competitiveness all go down the tubes when user experience goes into the can. And if you’re a network manager, you’re not going to get the dreaded “3 AM call” from just the CIO; you’re also going to be hammered by the CMO, CRO, CFO, and CEO.
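To make the compute-versus-communications distinction concrete, here is a minimal Python sketch of one common approximation: treat the TCP handshake round trip as the network's share of a request, and attribute the rest of the time-to-first-byte to the server. The `Transaction` fields and the `split_latency` helper are hypothetical names invented for illustration; this is not how any particular NPM product computes its metrics.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    """Client-side timestamps for one request, in milliseconds (hypothetical fields)."""
    syn_sent: float         # client sends the TCP SYN
    syn_ack_seen: float     # client receives the SYN-ACK
    request_sent: float     # client sends the application request
    first_byte_seen: float  # client receives the first byte of the response


def split_latency(t: Transaction) -> tuple[float, float]:
    """Return (network_rtt_ms, estimated_server_ms) for one transaction.

    Assumption: the handshake round trip approximates the network round trip
    for the request as well, so whatever remains of the time-to-first-byte
    is attributed to the server (compute) side.
    """
    network_rtt = t.syn_ack_seen - t.syn_sent
    time_to_first_byte = t.first_byte_seen - t.request_sent
    # Clamp at zero: measurement jitter can make the remainder slightly negative.
    server_time = max(time_to_first_byte - network_rtt, 0.0)
    return network_rtt, server_time


sample = Transaction(syn_sent=0.0, syn_ack_seen=40.0,
                     request_sent=50.0, first_byte_seen=290.0)
network_ms, server_ms = split_latency(sample)
print(f"network ~{network_ms:.0f} ms, server ~{server_ms:.0f} ms")
```

For the sample transaction, the split is about 40 ms of network round trip against 200 ms of server time, which points the investigation at the application tier rather than the network. The catch is that you need these timestamps from the endpoint itself, which is exactly what is hard to get from an appliance sitting somewhere else on the wire.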

NPM for a new era

It’s high time for network performance monitoring to come into the cloud era. It’s now possible to get rich performance metrics from your key application and infrastructure servers, even components like HAProxy and NGINX load balancers. You can map those metrics against Internet routes (BGP) and location (GeoIP), and correlate them with billions of volumetric traffic flow details (NetFlow, sFlow, IPFIX) from network infrastructure (e.g. routers and switches). It’s even possible to store those details unsummarized for months and to get answers in seconds on sophisticated queries across multi-billion row datasets. All this enables you to recognize issues immediately, rapidly figure out if the problem is with the network, and determine what to do about it. But you can’t do any of it without the instrumentation of cloud-friendly monitoring and the scalability of big data.
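As a rough illustration of what that correlation involves, the following Python sketch enriches a handful of flow records with routing and location attributes and then rolls up a performance metric per origin ASN. Everything here is an assumption made for the example: the record fields, the `PREFIX_TABLE` contents (documentation prefixes and private-use ASNs), and the choice of retransmit percentage as the metric. It shows the general shape of the technique on a toy dataset, not Kentik's implementation or scale.

```python
import ipaddress
from collections import defaultdict

# Hypothetical enrichment table: prefix -> (origin ASN, country code).
# In practice this would come from BGP routes and a GeoIP database.
PREFIX_TABLE = {
    ipaddress.ip_network("192.0.2.0/24"): (64512, "US"),
    ipaddress.ip_network("198.51.100.0/24"): (64513, "DE"),
    ipaddress.ip_network("198.51.100.128/25"): (64514, "DE"),
}


def lookup(ip: str):
    """Longest-prefix match of an IP against PREFIX_TABLE; None if no match."""
    addr = ipaddress.ip_address(ip)
    matches = [net for net in PREFIX_TABLE if addr in net]
    if not matches:
        return None
    best = max(matches, key=lambda net: net.prefixlen)
    return PREFIX_TABLE[best]


def summarize_by_asn(flows):
    """Aggregate bytes, packets, and retransmits per origin ASN."""
    totals = defaultdict(lambda: {"bytes": 0, "packets": 0, "retransmits": 0})
    for flow in flows:
        info = lookup(flow["src_ip"])
        asn = info[0] if info else None  # None groups unmatched sources
        for field in ("bytes", "packets", "retransmits"):
            totals[asn][field] += flow[field]
    summary = {}
    for asn, t in totals.items():
        pct = 100.0 * t["retransmits"] / t["packets"] if t["packets"] else 0.0
        summary[asn] = {**t, "retransmit_pct": pct}
    return summary


# Made-up flow records standing in for NetFlow/sFlow/IPFIX data.
flows = [
    {"src_ip": "192.0.2.10", "bytes": 1000, "packets": 10, "retransmits": 1},
    {"src_ip": "198.51.100.200", "bytes": 500, "packets": 20, "retransmits": 4},
    {"src_ip": "198.51.100.5", "bytes": 300, "packets": 5, "retransmits": 0},
    {"src_ip": "203.0.113.9", "bytes": 700, "packets": 7, "retransmits": 0},
]

for asn, stats in summarize_by_asn(flows).items():
    print(asn, stats)
```

The linear scan over prefixes is fine for three entries but would not survive a full Internet routing table, let alone billions of flow records; that gap is where the big data back end comes in.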

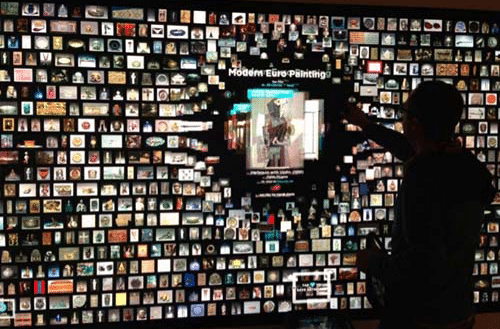

Kentik’s server-side NPM instrumentation goes wherever the servers go, in the data center or in the cloud.

At Kentik, we’ve added server-side NPM instrumentation that can go where the servers go, whether they are in the data center or in the cloud — any cloud, anywhere. The Kentik nProbe Host Agent examines packets as they pass through the NIC (or vNIC) and generates traffic activity and performance metrics that are forwarded to Kentik Detect for correlation and analytics. This puts end-point NPM data into the hands of the network pros, and instantly reveals what the actual network experience is from the viewpoint of the connected device. No more guessing or approximations from a bump somewhere on a wire that may or may not be anywhere near the actual server!

The agent we are using is worth a few more words. It’s developed and supported by ntop, and is the same technology that Boundary Networks used as a basis for its visionary offerings more than five years ago. Boundary was ahead of its time when it tried, unsuccessfully, to sell its solution to the nascent (and, from a networking perspective, unsophisticated) DevOps sector. But along the way, hundreds of these agents were deployed and delivered solid value to many, many organizations. We know this because several of those organizations have come to Kentik seeking a restoration of the visibility they lost when Boundary later pivoted in another direction and eventually wound down its operations.

With the advent of Kentik’s big data SaaS for network traffic analytics, monitoring, alerting, and defense, the data generated by nProbe becomes part of a unified overall solution that network engineers can use to ensure network performance.

Want to know more about the industry’s only Big Data-based SaaS NPM solution? Request a demo or start a free trial today.

For the latest on Gartner’s take on network performance monitoring, read the Gartner Market Guide for Network Performance Monitoring and Diagnostics, 2020, compliments of Kentik.