Summary

In the second installment of our data gravity series, Ted Turner examines how enterprises can address cost, performance, and reliability and help the data in their networks achieve escape velocity.

In an ideal world, organizations can establish a single, citadel-like data center that accumulates data and hosts their applications and all associated services, all while enjoying a customer base that is also geographically close. As this data grows in mass and gravity, it’s okay because all the new services, applications, and customers will continue to be just as close to the data. This is the “have your cake and eat it too” scenario for a scaling business’s IT.

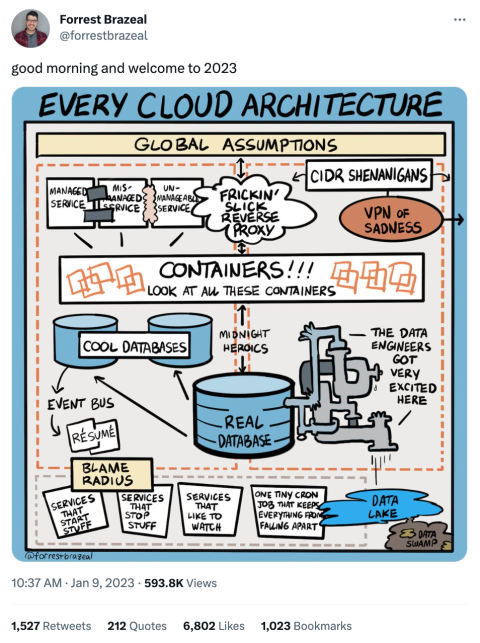

But what are network operators to do when their cloud networks have to be distributed, both architecturally and geographically? Suddenly, this dense data mass is a considerable burden: the same gravity that happily drew in service and customer data now traps that data, making it extremely expensive and complicated to move. As a cloud network scales, three forces compete against the acceptable accumulation of this gravity: cost, performance, and reliability.

In this second installment in my data gravity series, I want to examine how enterprises fight this gravity and help the data in their networks achieve escape velocity.

As a reminder, “escape velocity” (thanks Dave) in the context of cloud networks refers to the amount of effort required to move data between providers or services. Data mass, data gravity, and escape velocity are all directly related.

To see this played out, I want to use three discrete points along the cloud network lifecycle where many of today’s companies find themselves:

- Lift and shift

- Multizonal, hybrid

- Multi-cloud, global, observable

These stages do not, in my opinion, represent an evolution in complexity for complexity’s sake. They do, however, represent an architectural response to the central problem of data gravity.

All systems go

The lift and shift, re-deploying an existing code base “as is” into the cloud, is many organizations’ first step into cloud networking. This might mean a complete transition to cloud-based services and infrastructure or isolating an IT or business domain in a microservice, like data backups or auth, and establishing proof-of-concept.

Either way, it’s a step that forces teams to deal with new data, network problems, and potential latency. My own experience here is with identity. My first attempt at breaking down applications into microservices and deploying them in new data centers failed due to latency and data gravity in our San Diego data center. San Diego was where all of our customer data was stored. Dallas, Texas, was where that customer data was backed up for reliability and fault tolerance.

As we started our journey to refactor our applications into smaller microservices, we built a cool new data center based on the premise of hyperscaling. The identity team built the IAM application for high-volume processing of customer logins and validation of transaction requests. We did not account for the considerable volume of logins happening at a distance from the customer data.

San Diego is electricity poor, so our executives decided to build in a more energy-rich region with a mild climate and less seismic activity. We built inside the Cascade mountain range near the Seattle area.

What we found is that our cool new data center, built using the latest hyperscaler technologies, suffered from one deficiency – latency and distance from our customer data staged/stored in San Diego. The next sprint was to move the customer data to the new hyperscaler facility near Seattle. Once the customer data was staged next to the identity system, most applications worked great for the customers.

Over time, the applications were refactored to tolerate application-tier latency (introduced by network distance) and to degrade gracefully in place instead of failing outright.
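As a minimal sketch of what "degrade in place" can look like, the snippet below wraps a slow remote lookup in a timeout and falls back to the last known value when the call takes too long. This is not the code we ran; `fetch_profile_remote`, `fetch_profile`, the field names, and the timeout values are all hypothetical, and the remote call is simulated with a sleep.

```python
import concurrent.futures
import time


def fetch_profile_remote(user_id, delay_s):
    """Hypothetical stand-in for a lookup against a distant data center."""
    time.sleep(delay_s)  # simulated network and processing delay
    return {"user_id": user_id, "source": "remote", "fresh": True}


def fetch_profile(user_id, cache, timeout_s=0.05, delay_s=0.0):
    """Return remote data if it arrives in time, otherwise degrade to cached data."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(fetch_profile_remote, user_id, delay_s)
    try:
        result = future.result(timeout=timeout_s)
        cache[user_id] = result  # remember the last good answer
        return result
    except concurrent.futures.TimeoutError:
        stale = cache.get(user_id)
        if stale is not None:
            # Degraded mode: serve the last known value and flag it as stale.
            return {**stale, "source": "cache", "fresh": False}
        # Nothing cached: return a reduced response instead of raising.
        return {"user_id": user_id, "source": "none", "fresh": False}
    finally:
        pool.shutdown(wait=False)  # do not block the caller on the slow request


cache = {}
first = fetch_profile("u1", cache, timeout_s=0.5, delay_s=0.0)
second = fetch_profile("u1", cache, timeout_s=0.01, delay_s=0.2)
third = fetch_profile("u2", cache, timeout_s=0.01, delay_s=0.2)
```

In this sketch the first call succeeds and fills the cache, the second call times out and is served stale data, and the third has nothing cached and returns a reduced response. Whether stale data is acceptable depends on the service; for something like authentication, a stricter fallback may be the safer choice.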

The lesson here is that latency kills large data applications when customers (or staff) need live access to their data.
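To put rough numbers on that distance, here is a back-of-the-envelope sketch that estimates the best-case round-trip time between two sites from the great-circle distance. The coordinates are approximate city centers for San Diego and Seattle, not the actual facilities, and the figure of about 200 km per millisecond for light in fiber is a common approximation. Real paths are longer and add queuing and processing delay, so treat the result as a floor. The count of 20 sequential round trips per login is purely hypothetical.

```python
import math

FIBER_KM_PER_MS = 200.0  # assumption: light in fiber covers roughly 200 km per ms
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km):
    """Best-case round trip: there and back at fiber speed, nothing else."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Approximate city-center coordinates (assumed, not the real data centers).
distance = great_circle_km(32.72, -117.16, 47.61, -122.33)
rtt = min_rtt_ms(distance)

# Hypothetical: a login flow that needs 20 sequential round trips to the data.
login_floor_ms = 20 * rtt

print(f"distance ~{distance:.0f} km, RTT floor ~{rtt:.1f} ms, login floor ~{login_floor_ms:.0f} ms")
```

The point of the arithmetic is that the per-request floor looks small, but it multiplies with every sequential call a chatty application makes back to where the data lives.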

Second stage: Distributing your network’s data

How do IT organizations maintain cost, performance, and reliability targets as networks scale and cater to business and customer needs across a wider geographical footprint?

Let’s elaborate on the previous e-commerce example. The cloud architecture has matured as the company has grown, with client-facing apps deployed in several regional availability zones for national coverage. Sensitive data, backups, and analytics are being handled in their Chicago data center, with private cloud access via dedicated connections like ExpressRoute or Direct Connect to help efficiently move data out of the cloud and into the data center.

In terms of performance, the distribution into multiple zones has improved latencies and throughput and decreased data gravity in the network. With multiple availability zones and fully private backups, this network’s reliability has significantly improved, though it must be said that this still leaves the network vulnerable to a provider-wide outage.

Regarding cost, the improvements can be less clear at this stage. As organizations progress through their digital transformation, it is not simply a matter of new tech and infrastructure. IT personnel structure will need to undergo a corresponding shift as service models change, needed cloud competencies proliferate, and teams start to leverage strategies like continuous integration and continuous delivery/deployment (CI/CD). These adaptations can be expensive at the outset. I wrote another series, Managing the hidden costs of cloud networking, that outlines the many ways migrating to cloud networks can lead to unforeseen expenses.

Adapting to distributed scale

The tools and strategies needed to deploy and manage a multizonal network successfully are the building blocks for a truly scalable digital transformation. Once in place, this re-org of both IT architecture and personnel enables a dramatic increase in the size of datasets that network operators need to contend with.

Many of the tools and concepts used in this effort to distribute data gravity will already be familiar to network and application engineers:

- Load balancers

- Content delivery networks

- Queues

- Proxies

- Caches

- Replication

- Statelessness

- Compression

What do these have to do with data gravity? You’ll recall that throughput and latency are the two main drivers of data gravity. Essentially, these all serve to abstract either the distance or size of the data to maintain acceptable throughput and latency as the data’s point-of-use moves further and further away from your data centers or cloud zones.
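Two of these ideas are easy to show in a few lines. The sketch below uses a deliberately simplified model (one round trip plus serialization time) to show how compression shrinks the size side of the problem and how a cache shrinks the distance side. The payload, the 100 Mbps link, the 17 ms and 1 ms round-trip times, and the 90% hit ratio are all assumed values chosen only for illustration.

```python
import zlib


def transfer_time_s(size_bytes, bandwidth_bps, rtt_s):
    """Simplified model: one round trip plus time to push the bytes onto the link."""
    return rtt_s + (size_bytes * 8) / bandwidth_bps


def expected_time_with_cache(hit_ratio, local_time_s, remote_time_s):
    """Average fetch time when a fraction of requests is served from a nearby cache."""
    return hit_ratio * local_time_s + (1 - hit_ratio) * remote_time_s


# Compression abstracts the size of the data.
payload = b'{"customer_id": 12345, "status": "active"}\n' * 10_000
compressed = zlib.compress(payload, 6)

raw_time = transfer_time_s(len(payload), 100e6, 0.017)
compressed_time = transfer_time_s(len(compressed), 100e6, 0.017)

# Caching abstracts the distance to the data.
local_time = transfer_time_s(len(compressed), 100e6, 0.001)
cached_avg = expected_time_with_cache(0.9, local_time, compressed_time)

print(f"raw: {len(payload)} bytes, compressed: {len(compressed)} bytes")
print(f"raw {raw_time:.4f}s, compressed {compressed_time:.4f}s, with cache {cached_avg:.4f}s")
```

The sample payload is highly repetitive, so it compresses far better than most real data would. The other items in the list apply the same two levers in different places in the network.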

It becomes imperative that network operators/engineers and data engineers work closely to think about where data is being stored, for how long, when and how often it is replicated or transformed, where it is being analyzed, and how it is being moved within and between the subnets, SD-WANs, VPNs, and other network abstractions that make up the larger network.

Reliability

Maintaining the integrity of networks has implications beyond uptime. Security, data correctness, and consistency mean that cloud networks must be highly reliable. This means the infrastructure and processes necessary to ensure that data stays available must be put in place before an incident occurs.

At scale, this reliability becomes one of the more significant challenges with data gravity. As we’ve discussed, moving, replicating, storing, and otherwise transforming massive data sets is cost prohibitive. Overcoming these challenges brings networks into their most mature, cloud-ready state.
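For a sense of scale, the following sketch estimates how long a bulk move takes over a dedicated link and what it might cost in per-gigabyte transfer fees. The dataset size, link speed, utilization, and especially the price per gigabyte are hypothetical inputs, not quotes from any provider.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(dataset_bytes, link_bps, utilization=0.8):
    """Days needed to move a dataset over a link at a sustained utilization."""
    seconds = (dataset_bytes * 8) / (link_bps * utilization)
    return seconds / SECONDS_PER_DAY


def transfer_cost(dataset_bytes, price_per_gb):
    """Transfer fee for a dataset at a flat, hypothetical price per (decimal) GB."""
    return dataset_bytes / 1e9 * price_per_gb


one_petabyte = 1e15  # bytes, decimal units

ideal_days = transfer_days(one_petabyte, 10e9, utilization=1.0)  # 10 Gbps, fully used
realistic_days = transfer_days(one_petabyte, 10e9)               # 80% sustained
cost = transfer_cost(one_petabyte, price_per_gb=0.05)            # hypothetical price

print(f"ideal {ideal_days:.1f} days, at 80% {realistic_days:.1f} days, fee ~${cost:,.0f}")
```

Even with a fully used 10 Gbps link, a petabyte takes more than nine days to move, before any replication, validation, or transformation work is counted.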

A network of gravities

Reformatting personnel and IT to perform in a more distributed context improves application performance, sets the groundwork for cost-effective scaling, and creates a more reliable network with geographical redundancies.

Take a hypothetical organization that has scaled well on the multizonal, single-provider cloud architecture outlined in the previous section. While this organization was scaling on this new framework, one of its leading competitors was brought down by a cloud provider outage. The outage took down one of the competitor’s critical security services and exposed it to attack. Taking the hint, our hypothetical organization’s board pushed to refactor the codebase and networking policies to support multi-cloud deployments. As with every previous step along this digital transformation, this new step introduces added complexity and strains against data gravity.

Another path to multi-cloud deployments in 2023 is acquisition. We see many current conglomerates acquiring technologies and customers that reside in cloud providers different from their core deployments.

The shift to multiple providers means significant code changes at the application level, additional personnel for the added proficiencies and responsibilities, the possibility of entirely new personnel abstractions like a platform or cloud operations team, and of course, the challenge of extraction, replication, and any other data operations that need to take place to move datasets into the new clouds.

Multi-cloud networks

For security, monitoring, and resource management, a fundamental principle in multi-cloud networks is microsegmentation. Like microservices, networks are divided into discrete units complete with their own compute and storage resources, dependencies, and networking policies.
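One way to picture microsegmentation is as a default-deny allow list between named segments. The sketch below is a toy model of that idea, not any vendor's policy engine; the `Rule` and `SegmentPolicy` names and the segment names (`web`, `identity`, `backups`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One explicitly allowed flow: source segment, destination segment, port."""
    src: str
    dst: str
    port: int


class SegmentPolicy:
    """Default-deny policy: a flow is allowed only if a matching rule exists."""

    def __init__(self, rules):
        self._allowed = set(rules)

    def is_allowed(self, src, dst, port):
        return Rule(src, dst, port) in self._allowed


policy = SegmentPolicy([
    Rule("web", "identity", 443),       # web tier may call the identity service
    Rule("identity", "backups", 5432),  # identity may reach its backup store
])

print(policy.is_allowed("web", "identity", 443))  # explicitly allowed
print(policy.is_allowed("web", "backups", 5432))  # no rule, so denied
print(policy.is_allowed("identity", "web", 443))  # rules are directional
```

Because each rule is directional and nothing is allowed by default, a compromised segment can only reach the segments it was explicitly granted.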

Now apply the same principles of microsegmentation to network access for resources worldwide: physical retail, office space, warehousing, and manufacturing. These resources reach into the cloud to find and store reference data (customer purchase history lookups, manufacturing CAD/CAM files, etc.). The complexity is dizzying!

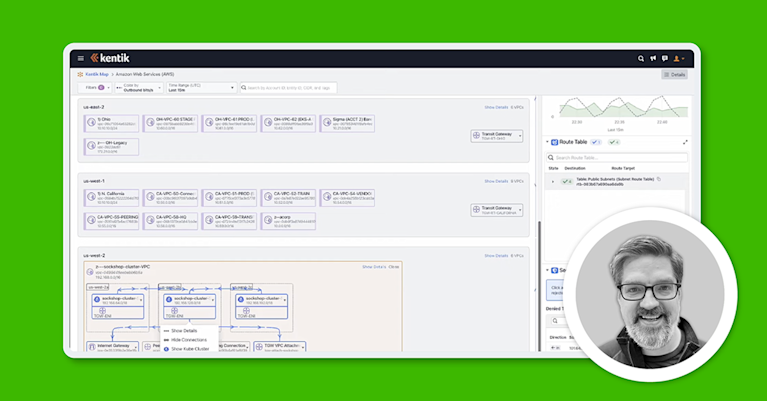

Managing data complexity with network observability

The tools and abstractions (data pipelines, ELT, CDNs, caches, etc.) that help distribute data in a network add significant complexity to monitoring and observability efforts. In the next installment of this series, we will consider how to leverage network observability to improve data management in your cloud networks.

In the meantime, check out the great features in Kentik Cloud that make it the leading network observability solution for today’s leading IT enterprises.