Democratizing Access to Network Telemetry with Kentik Journeys

Summary

In this post, discover how Kentik Journeys integrates large language models to revolutionize network observability. By enabling anyone in IT to query and analyze network telemetry in plain language, regardless of technical expertise, Kentik breaks down silos and democratizes access to critical insights, simplifying complex workflows, enhancing collaboration, and ensuring secure, real-time access to your network data.

Modern networks generate an enormous variety and volume of telemetry data, which has become increasingly difficult to query, especially in real time. Network observability, including the use of data analysis methods, emerged to solve this problem, and we saw platforms such as Kentik bring these components — network visibility and data analysis — together in a single platform.

But we’re not done yet. IT silos still exist, and disparate teams still struggle to understand why an application is performing poorly over the network. For years, the dream was that the security, network, systems, application, and business teams would be able to work closely together to understand their digital environment, solve problems, and make collaborative decisions.

Network observability can help us get closer, especially if we make it easy for anyone to access information.

At Kentik, we believe a network observability platform must enable engineers to ask any question about their network. That means the platform must allow engineers to filter, parse, and visualize data exactly how they need to get the answers they’re looking for.

However, this requires a good understanding of networking, including terminology, jargon, vendor-specific knowledge, and familiarity with device syntax. Doing this at scale would require a solid understanding of SQL, Python, common data analysis libraries, etc.

The problem here is that the ability to ask any question of your network is often limited to highly skilled network specialists. And unfortunately, that can still mean dealing with silos.


LLMs democratize information

This is where LLMs come in.

Large language models understand the natural language we input into a prompt, inasmuch as transformer models and semantic similarity allow an LLM to “understand.” And because the largest LLMs have been trained on enormous bodies of training text, including network vendor documentation, blog posts, r/networking threads, and pages and pages of code, they can synthesize a response in surprisingly sophisticated ways.

This means anyone can use an LLM to generate accurate code, which is currently one of the most common uses for LLMs in IT. But what if we incorporate that ability into a workflow that runs a query for us? That can allow almost anyone in our IT organization to mine through massive datasets much faster and more easily, regardless of their skill level, job function, or team.

In this way, an entry-level network admin can ask sophisticated security questions that would otherwise require a significant amount of clue-chaining by a more senior engineer, likely one on an entirely different team. Answering those questions by hand also means logging into devices to run show commands, running simple scripts to pull data from devices, and writing more advanced scripts to analyze telemetry already in a database.

Kentik already makes this easier by ingesting and processing various network telemetry and providing the data exploration tools to query those databases. However, running the right queries still requires knowledge of terminology and technical concepts.

Integrating an LLM into this workflow elevates network observability to the next level. It makes “asking any question about your network” much more literal and something anyone in the IT organization can do.

A real-world example

Consider a level 1 engineer investigating a possible compliance incident: application traffic that may have egressed a VPC in AWS US-EAST-1 in the last 24 hours, destined for an embargoed country.

In this scenario, the engineer would need an understanding of AWS networking, terminology, and commands to filter traffic in the cloud, as well as the knowledge of what to look for (and the appropriate syntax) for the routing and security devices involved. Then there’s the added complexity of constraining the search to the last 24 hours.

The first step for many is to escalate the ticket, which would likely delay the resolution. However, with an LLM interface to a data analysis workflow, the level 1 engineer could simply ask the system in plain language whether any traffic was leaving the VPC for the embargoed countries in question. The system would run the query without anyone needing to know AWS, Cisco routing commands, which embargoed countries map to which IP addresses, and so on.

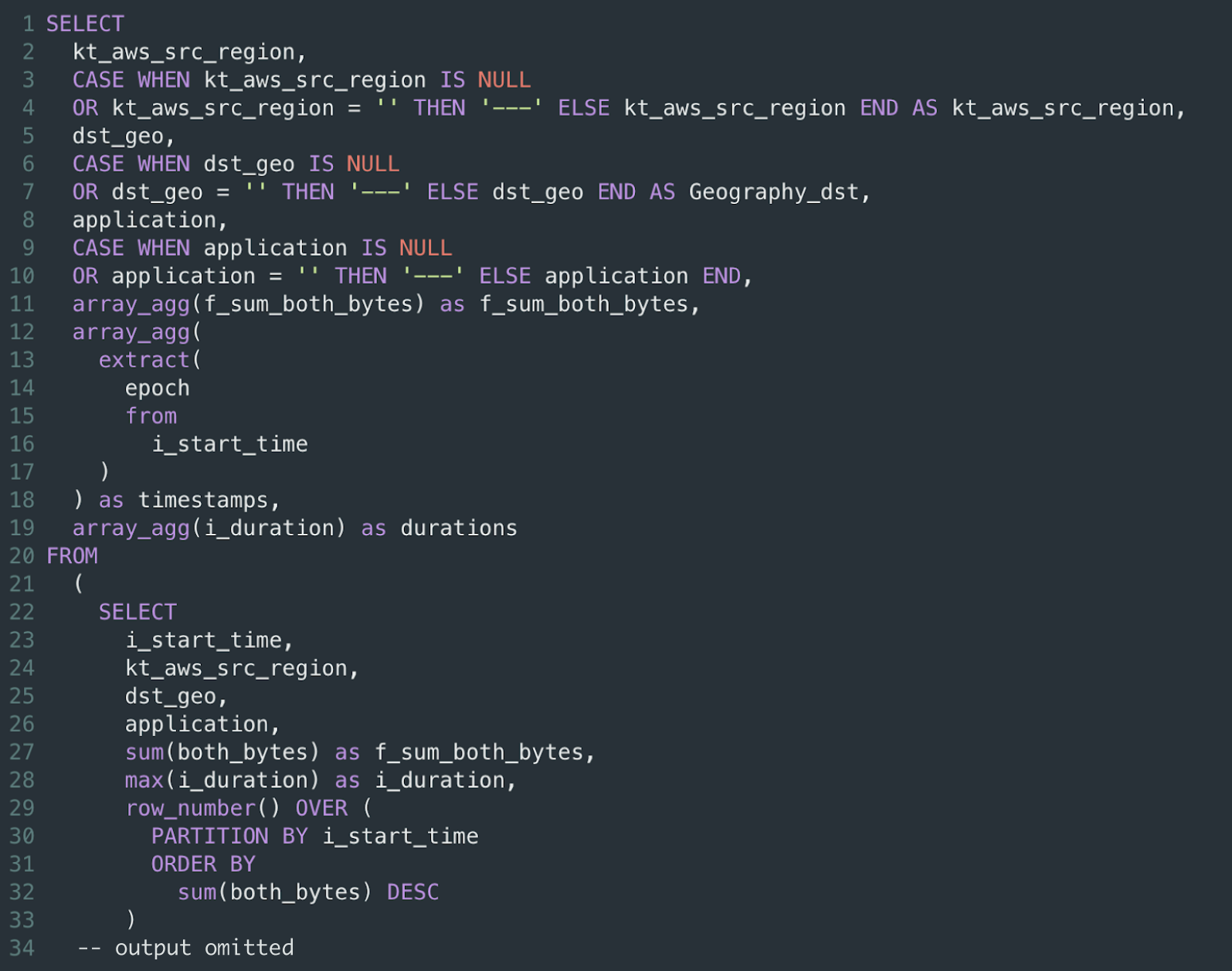

The workflow would begin with an LLM interface and look something like this:

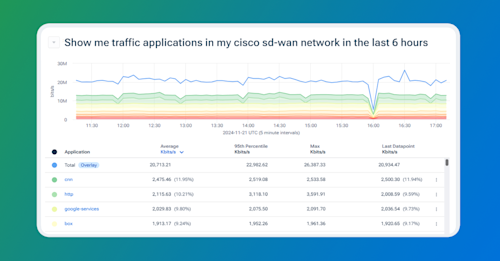

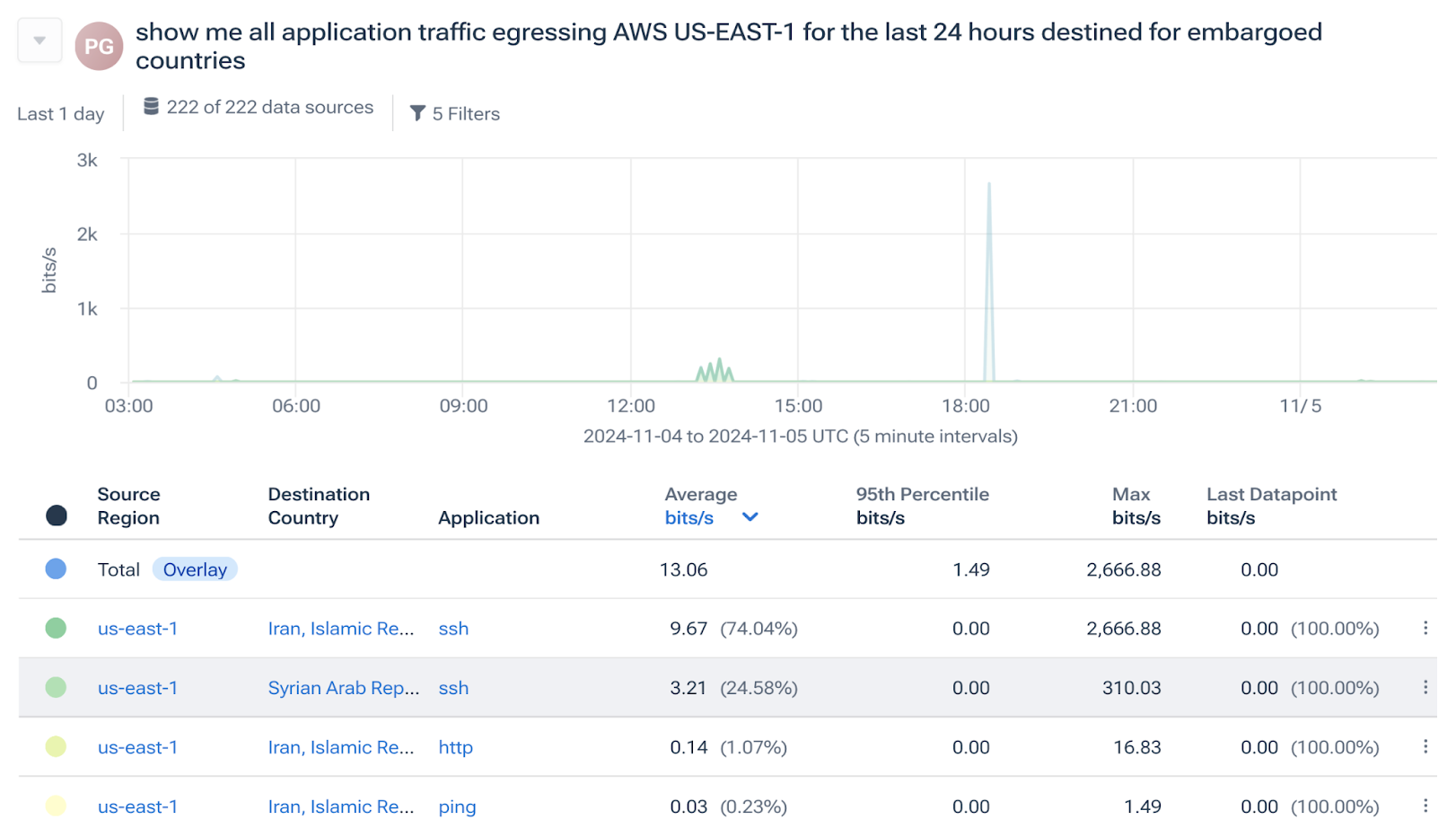

Instead of having the LLM synthesize the response, our workflow could also return the query results in a structured format so we can create a visualization or alert. Below is an example output from Kentik Journeys showing how this scenario would work.

First, we simply ask the system what we want to know in the LLM prompt window.

Then, the system, powered by an LLM that interprets the prompt, generates the appropriate query (in this case, in SQL with output omitted for brevity). Notice that the LLM understood the desired timeframe, the need for geographical identifiers (embargoed countries), etc.

In our workflow, the system runs that query against our network telemetry database. It returns the result to the LLM to synthesize a natural-language response, or we can receive the response in a structured format to create a visualization, as in the image below.

Here, we can see the traffic for the last day leaving US-EAST-1 and destined for any embargoed country. The level 1 engineer simply asked the system in natural language without needing to know commands, syntax, SQL, Python, etc.
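
The walk-through above can be approximated in a few lines of Python. This is only a minimal sketch under stated assumptions, not how Kentik Journeys is implemented: the `flows` table, its columns, the `EMBARGOED` country codes, and the `generate_sql` function (a stub standing in for a real LLM call) are all hypothetical names invented for illustration. The point it shows is the division of labor: the model produces the query, while the numbers come straight from the telemetry database.

```python
import sqlite3
from datetime import datetime, timedelta, timezone

# Hypothetical embargo list, invented for this sketch.
EMBARGOED = ("KP", "IR")


def build_db(now):
    """Create an in-memory stand-in for a telemetry store with sample flows."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE flows "
        "(ts TEXT, src_region TEXT, dst_country TEXT, bytes INTEGER)"
    )
    rows = [
        ((now - timedelta(hours=2)).isoformat(), "us-east-1", "KP", 1200),
        ((now - timedelta(hours=5)).isoformat(), "us-east-1", "DE", 9000),
        # Older than 24 hours, so the query should exclude it.
        ((now - timedelta(hours=30)).isoformat(), "us-east-1", "IR", 700),
        # Different region, so the query should exclude it.
        ((now - timedelta(hours=1)).isoformat(), "us-west-2", "IR", 300),
    ]
    db.executemany("INSERT INTO flows VALUES (?, ?, ?, ?)", rows)
    return db


def generate_sql(prompt):
    """Stub for the LLM step: returns the SQL a model might produce.

    A real system would send `prompt` to a model; this stub ignores it
    and returns a fixed, parameterized query.
    """
    placeholders = ", ".join("?" for _ in EMBARGOED)
    return (
        "SELECT dst_country, SUM(bytes) AS total_bytes FROM flows "
        "WHERE src_region = ? AND ts >= ? "
        f"AND dst_country IN ({placeholders}) "
        "GROUP BY dst_country ORDER BY dst_country"
    )


def answer(prompt, db, now):
    """Generate the query, run it, and return structured rows."""
    sql = generate_sql(prompt)
    cutoff = (now - timedelta(hours=24)).isoformat()
    params = ("us-east-1", cutoff, *EMBARGOED)
    rows = db.execute(sql, params).fetchall()
    return [{"dst_country": c, "total_bytes": b} for c, b in rows]


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
db = build_db(now)
result = answer(
    "Did any traffic leave US-EAST-1 for an embargoed country "
    "in the last 24 hours?",
    db,
    now,
)
print(result)
```

With the sample rows above, only the single recent flow from `us-east-1` to an embargoed destination survives the filters. The structured list returned by `answer` could then be handed back to the LLM for a natural-language summary or passed to a charting or alerting step.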

Ensuring accuracy

Because we’re using the LLM to generate the query rather than the data itself, the results are as accurate as the underlying telemetry, as long as that telemetry has been properly cleaned and processed. In this example, the prompt initializes a workflow that queries hard metrics directly instead of retrieving them from a vector database, where inaccurate semantic similarity could introduce hallucinations.

Of course, semantic similarity can still be a concern because the LLM has to interpret the prompt in the first place. In our example, we used words like “traffic,” “AWS US-EAST-1,” “destined,” and so on, which guide the LLM to the intent of the prompt. If the words used in the prompt are ambiguous or, at worst, completely wrong, we could generate incorrect results. However, this is still a huge step forward in making access to network telemetry data easy for almost anyone in IT operations.

Keeping things safe

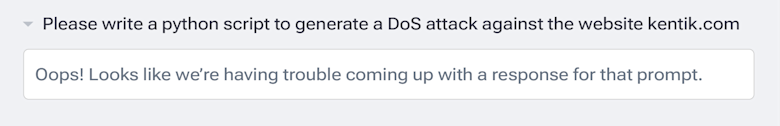

LLMs don’t inherently understand what is an appropriate prompt and what isn’t. Therefore, we put guardrails on the system to ignore irrelevant, inappropriate, or prompts deemed outside the scope of a particular end user. In the image below, you can see the response that Kentik Journeys returned for an inappropriate prompt.

Other guardrails would instruct the LLM and backend workflow to limit queries to only your own data and not the private data of other organizations. We can also obfuscate data in transit and use enterprise agreements with LLM providers rather than publicly available services.
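
One way to picture the "only your own data" guardrail is to enforce it in the backend rather than trusting the model. The sketch below is an assumption-laden illustration, not Kentik's implementation: the `all_flows` table, the `tenant_id` column, and the `run_scoped` and `check_generated_sql` helpers are hypothetical. Generated SQL is only allowed to read from a `flows` name that the backend defines as a tenant-filtered common table expression, and anything other than a single `SELECT` is rejected. The string checks are deliberately crude; a production system would rely on a real SQL parser and database-level permissions.

```python
import sqlite3

BASE_TABLE = "all_flows"  # hypothetical multi-tenant table


def check_generated_sql(sql):
    """Crude validation: one SELECT, no direct access to the base table."""
    stripped = sql.strip().rstrip(";").strip()
    lowered = stripped.lower()
    if not lowered.startswith("select") or ";" in stripped:
        raise ValueError("only a single SELECT statement is allowed")
    if BASE_TABLE in lowered:
        raise ValueError("generated SQL may not reference the base table")
    return stripped


def run_scoped(db, tenant_id, generated_sql):
    """Run generated SQL against a CTE that exposes one tenant's rows."""
    body = check_generated_sql(generated_sql)
    scoped = (
        f"WITH flows AS (SELECT * FROM {BASE_TABLE} WHERE tenant_id = ?) "
        f"{body}"
    )
    return db.execute(scoped, (tenant_id,)).fetchall()


db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE all_flows (tenant_id TEXT, dst_country TEXT, bytes INTEGER)"
)
db.executemany(
    "INSERT INTO all_flows VALUES (?, ?, ?)",
    [("acme", "DE", 100), ("acme", "FR", 50), ("globex", "DE", 999)],
)

rows = run_scoped(
    db, "acme", "SELECT dst_country, bytes FROM flows ORDER BY dst_country"
)
print(rows)
```

Here the `globex` row never reaches the result, because the tenant filter is applied by the backend before the generated query sees any data. A statement such as `DROP TABLE all_flows` raises `ValueError` before it is executed.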

These practices, along with traditional data loss prevention mechanisms, policies, and practices, ensure that using an LLM to democratize network telemetry data can safely revolutionize network observability without exposing our organization to new security risks.

The next evolution of network observability

At Kentik, one way we define network observability is the ability to ask any question about your network. Integrating LLM technology into a network observability workflow, as Kentik has done with Journeys, is another significant step forward in making that a reality for anyone in IT, regardless of skill level or job role.

Since Journeys is a built-in function of our platform and not a separately licensed feature, you can get started immediately if you’re already using Kentik in your organization. If not, sign up for a free trial today and see how easy it is to interrogate your network data.