Summary

In the early hours of Wednesday, January 25, Azure, Microsoft’s public cloud, suffered a major outage that disrupted its cloud-based services and popular applications such as SharePoint, Teams, and Office 365. In this post, we’ll highlight some of what we saw using Kentik’s unique capabilities, including some surprising aftereffects of the outage that continue to this day.

Microsoft has since blamed the outage on a flawed router command that took down a significant portion of the cloud’s connectivity beginning at 07:09 UTC.

Azure outage in synthetic performance tests

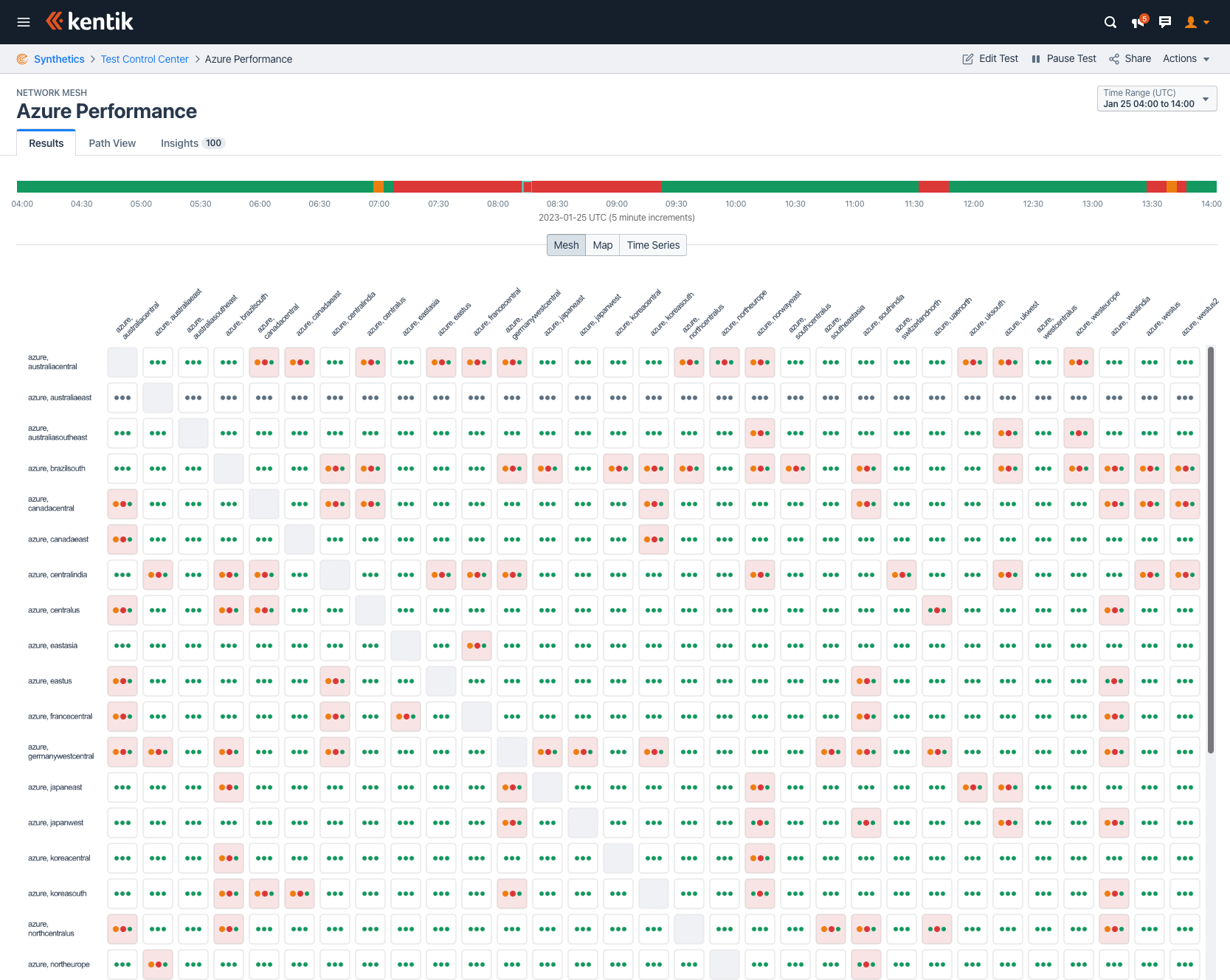

Kentik operates an agent-to-agent mesh for each of the public clouds — a portion of which is freely available to any Kentik customer in the Public Clouds tab of the State of the Internet page. When Azure began having problems last week, our performance mesh lit up like a Christmas tree with red alerts mixed in with green statuses. A partial view is shown below:

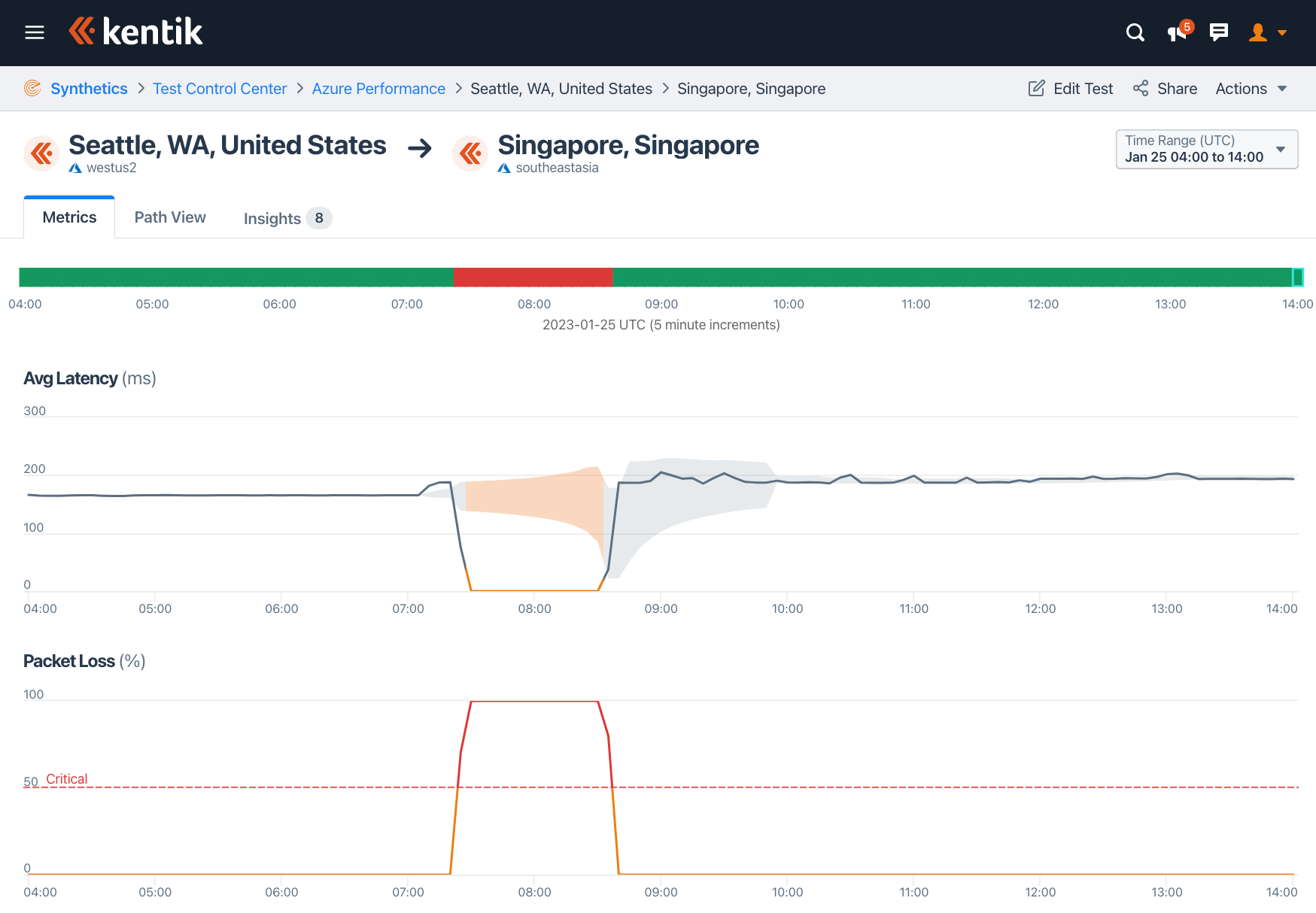

Clicking into any of these red cells takes the user to a view such as the one below, revealing latency, packet loss, and jitter between our agents hosted in Azure. The screenshot below shows a temporary disconnection from 07:20 to 08:40 UTC between Azure’s westus2 region in Seattle and its southeastasia region in Singapore.

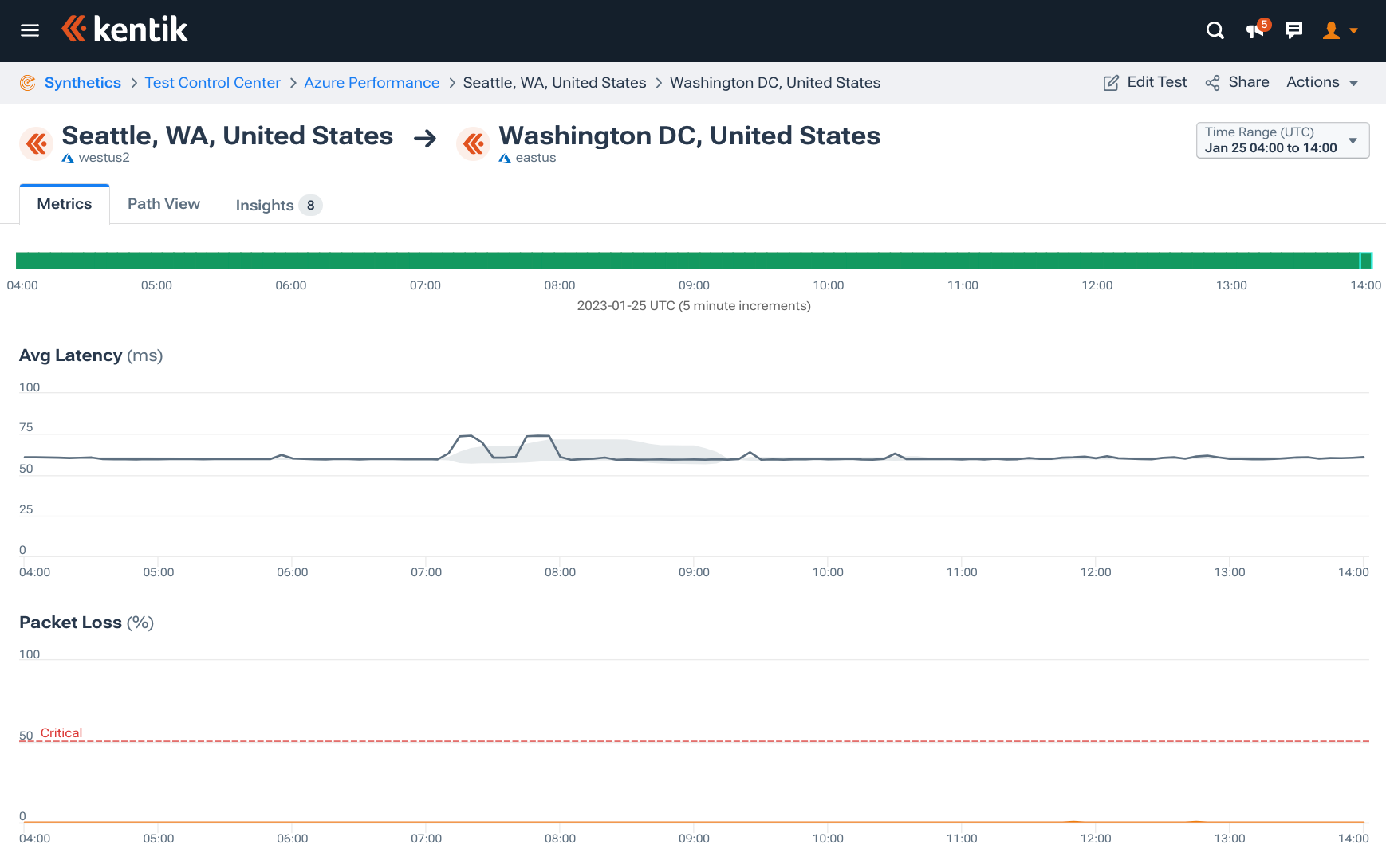

However, not all intra-Azure connections suffered disruptions like this. While westus2’s link to southeastasia went down, its link to eastus in the Washington, DC area suffered only minor latency bumps, which stayed within our alerting thresholds.
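The red and green cells come from comparing each test’s measurements against alerting thresholds. The sketch below shows one way such a check could work. The threshold values, the baseline-relative latency rule, and the jitter definition (mean absolute difference between consecutive round-trip times) are all assumptions for illustration, not Kentik’s actual alerting logic.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional, Sequence


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical values chosen for illustration only.
    max_loss_pct: float = 5.0
    max_latency_increase_ms: float = 50.0  # allowed rise over the pair's baseline
    max_jitter_ms: float = 20.0


def summarize(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Summarize one test interval; None marks a probe with no reply."""
    if not rtts_ms:
        raise ValueError("need at least one probe")
    replies = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(replies)) / len(rtts_ms)
    latency_ms = mean(replies) if replies else float("inf")
    # Simple jitter: mean absolute difference between consecutive replies.
    diffs = [abs(b - a) for a, b in zip(replies, replies[1:])]
    jitter_ms = mean(diffs) if diffs else 0.0
    return {"loss_pct": loss_pct, "latency_ms": latency_ms, "jitter_ms": jitter_ms}


def status(summary: dict, baseline_latency_ms: float,
           t: Thresholds = Thresholds()) -> str:
    """Return "red" if any metric breaches its threshold, else "green"."""
    if summary["loss_pct"] > t.max_loss_pct:
        return "red"
    if summary["latency_ms"] > baseline_latency_ms + t.max_latency_increase_ms:
        return "red"
    if summary["jitter_ms"] > t.max_jitter_ms:
        return "red"
    return "green"


# A minor latency bump on a pair with a ~70 ms baseline stays green...
bump = summarize([72.0, 75.0, 78.0, 74.0])
print(status(bump, baseline_latency_ms=70.0))  # green
# ...while an interval with no replies at all turns red.
outage = summarize([None] * 10)
print(status(outage, baseline_latency_ms=170.0))  # red
```

Using a per-pair baseline rather than one fixed latency ceiling keeps a long path such as westus2 to southeastasia from looking permanently degraded next to a short one such as westus2 to eastus.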

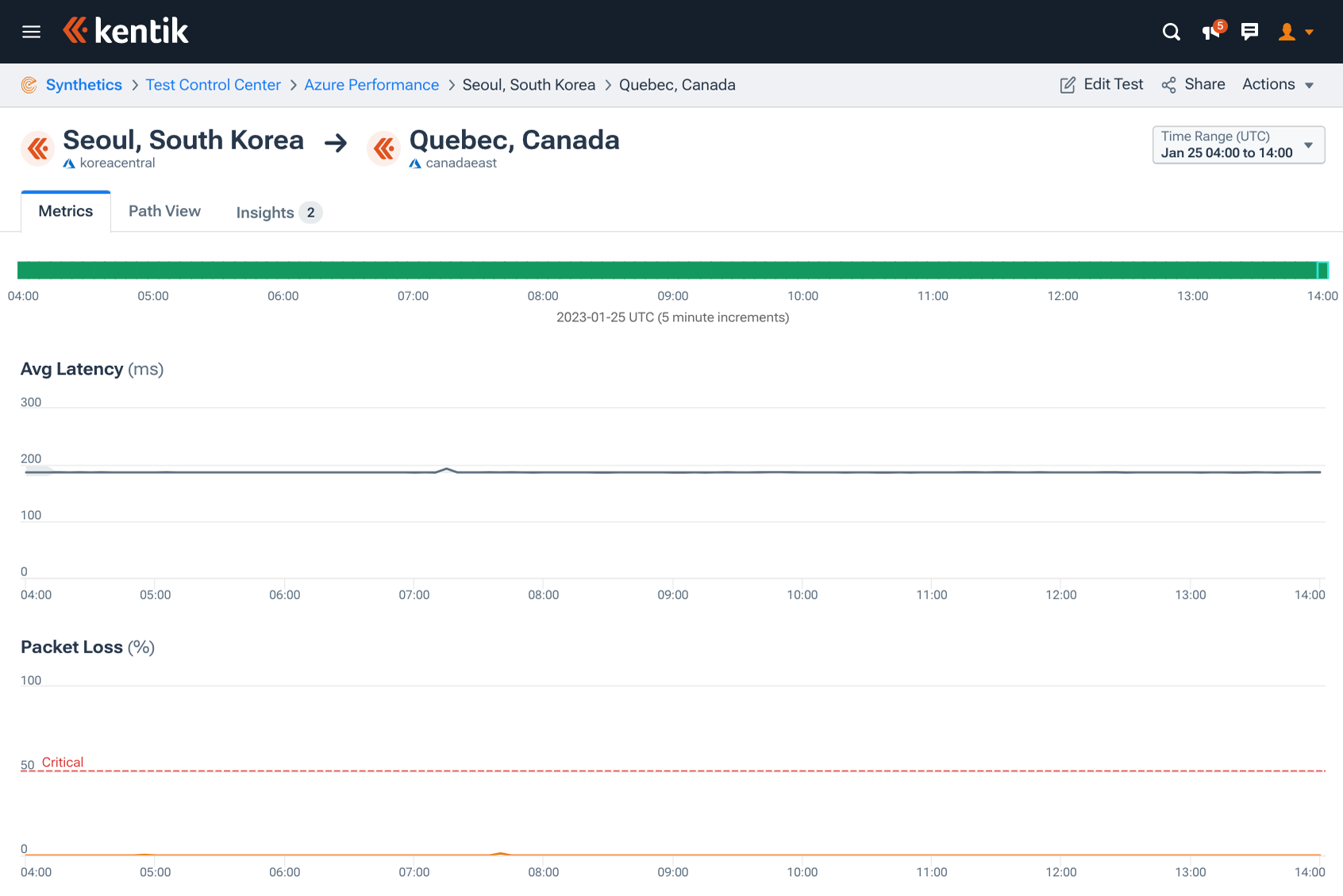

To give another curious example of how varied this outage’s impact was, consider two equivalent measurements from different cities in South Korea to Canada. Tests between koreasouth in Busan and canadaeast in Quebec failed between 07:50 and 08:25 UTC, while connectivity between koreacentral in Seoul and the same Canadian region hardly registered any impact at all.

Without intimate knowledge of Azure’s architecture, it is hard to know exactly why some parts of its connectivity were impacted while others were not, but we can surmise that the loss of connectivity varied greatly depending on the source and destination.

Azure outage in NetFlow

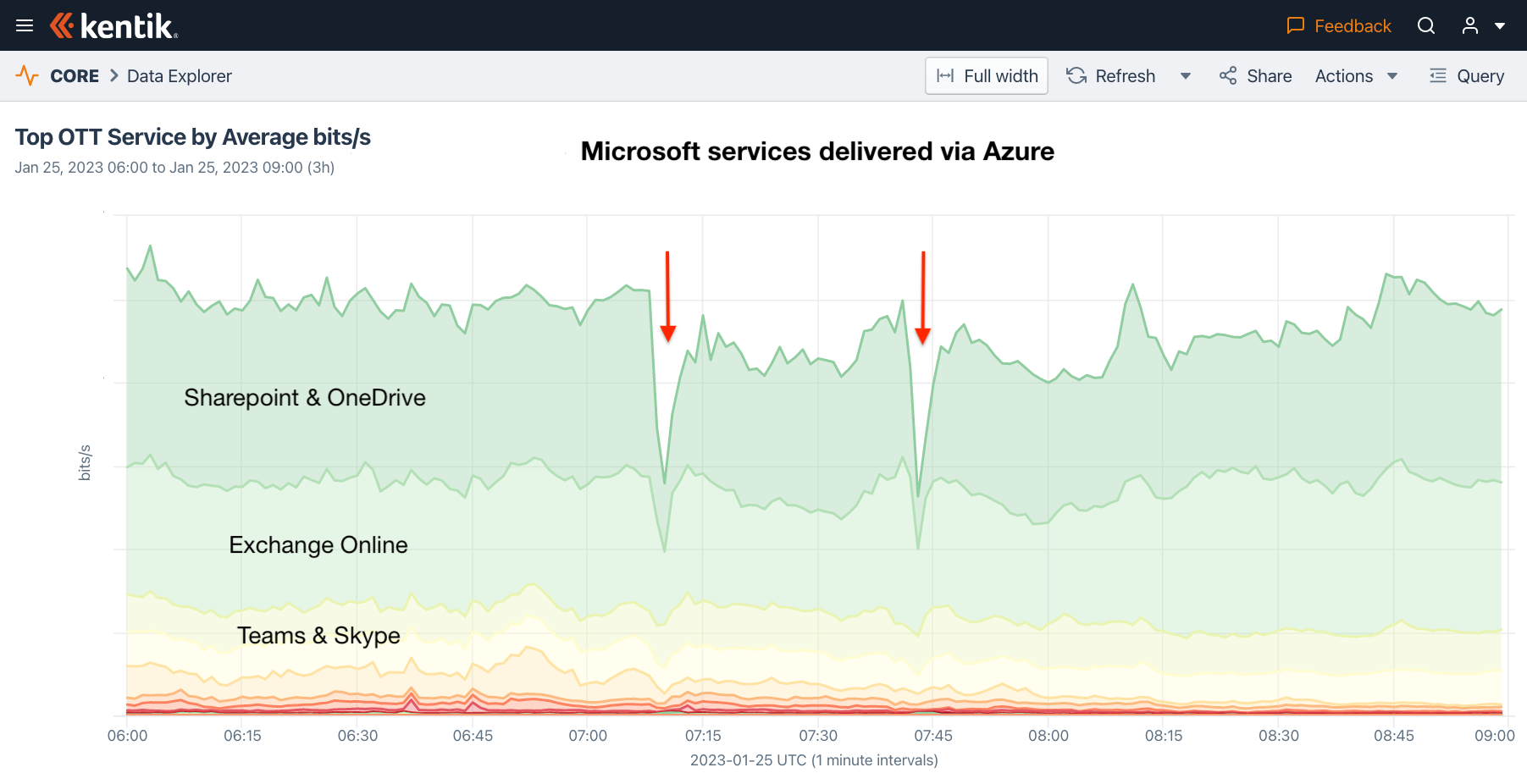

When we look at the impact of the outage on Microsoft services in aggregate NetFlow based on Kentik’s OTT service tracking, we arrive at the graphic below, which shows two distinct drops in traffic volume at 07:09 UTC and 07:42 UTC and an overall decreased level of traffic volume until 08:43 UTC.

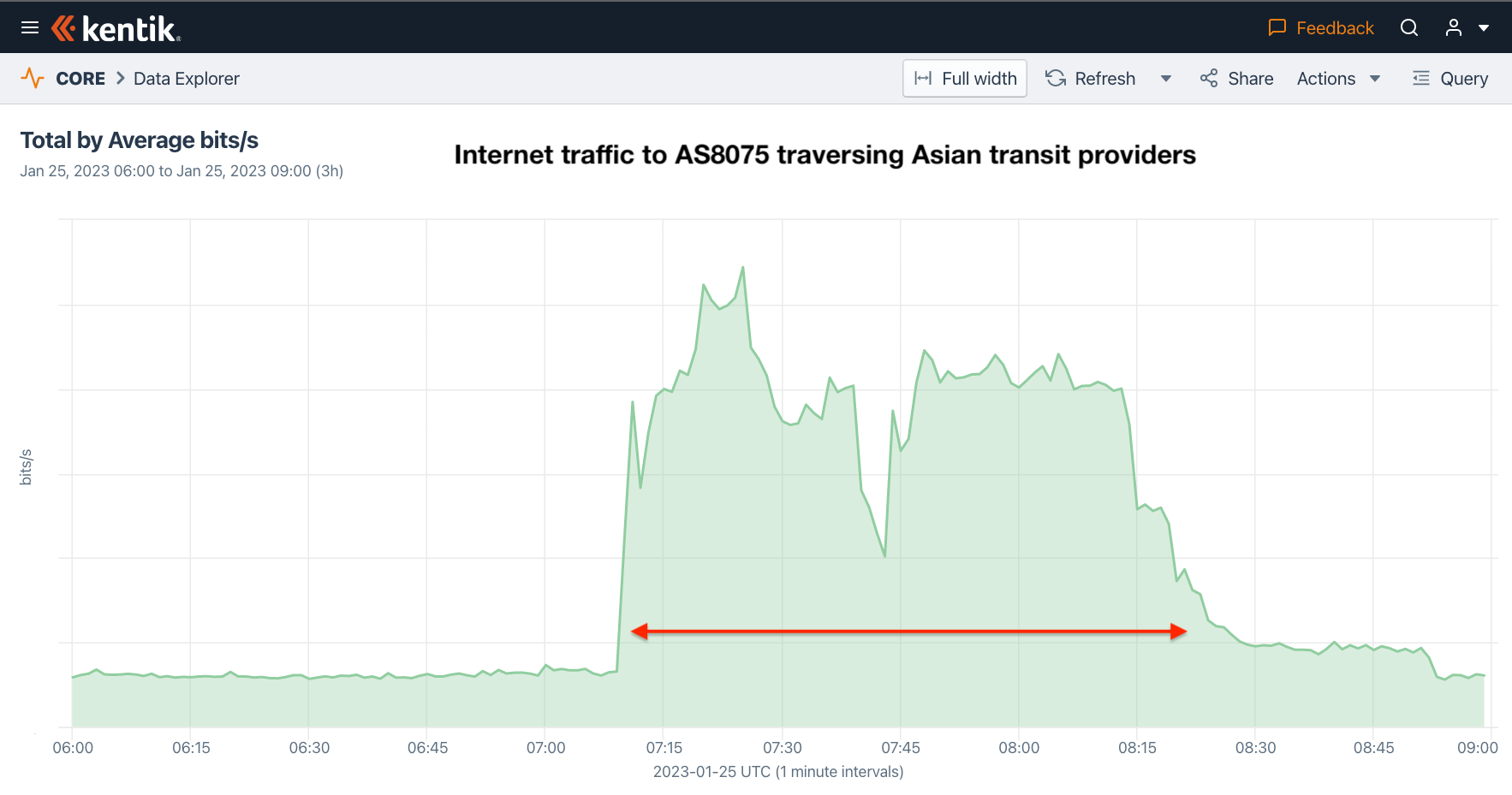

Because the outage began at 07:09 UTC, during afternoon business hours across East Asia, the impact was especially disruptive there. If we look at the aggregate NetFlow from our service provider customers in Asia to Microsoft’s AS8075, we see a surge in traffic. Why, you might ask, would there be a surge in traffic during an outage?

Many peering sessions with AS8075 were disrupted during the outage, so traffic shifted onto transit links, and thus onto the networks of Kentik’s customers in Asia. We touch on this phenomenon again in the next section.

Azure outage in BGP

And finally, let’s take a look at this outage from the perspective of BGP. Microsoft uses a few ASNs to originate routes for its cloud services. AS8075 is Microsoft’s main ASN, and it originates over 370 prefixes. In this incident, 104 of those prefixes exhibited no impact whatsoever — see 45.143.224.0/24, 2.58.103.0/24, and 66.178.148.0/24 as examples.


However, the other prefixes originated by Microsoft exhibited a high degree of instability, indicating that something was awry inside the Azure cloud. Let’s take a look at a couple of examples.

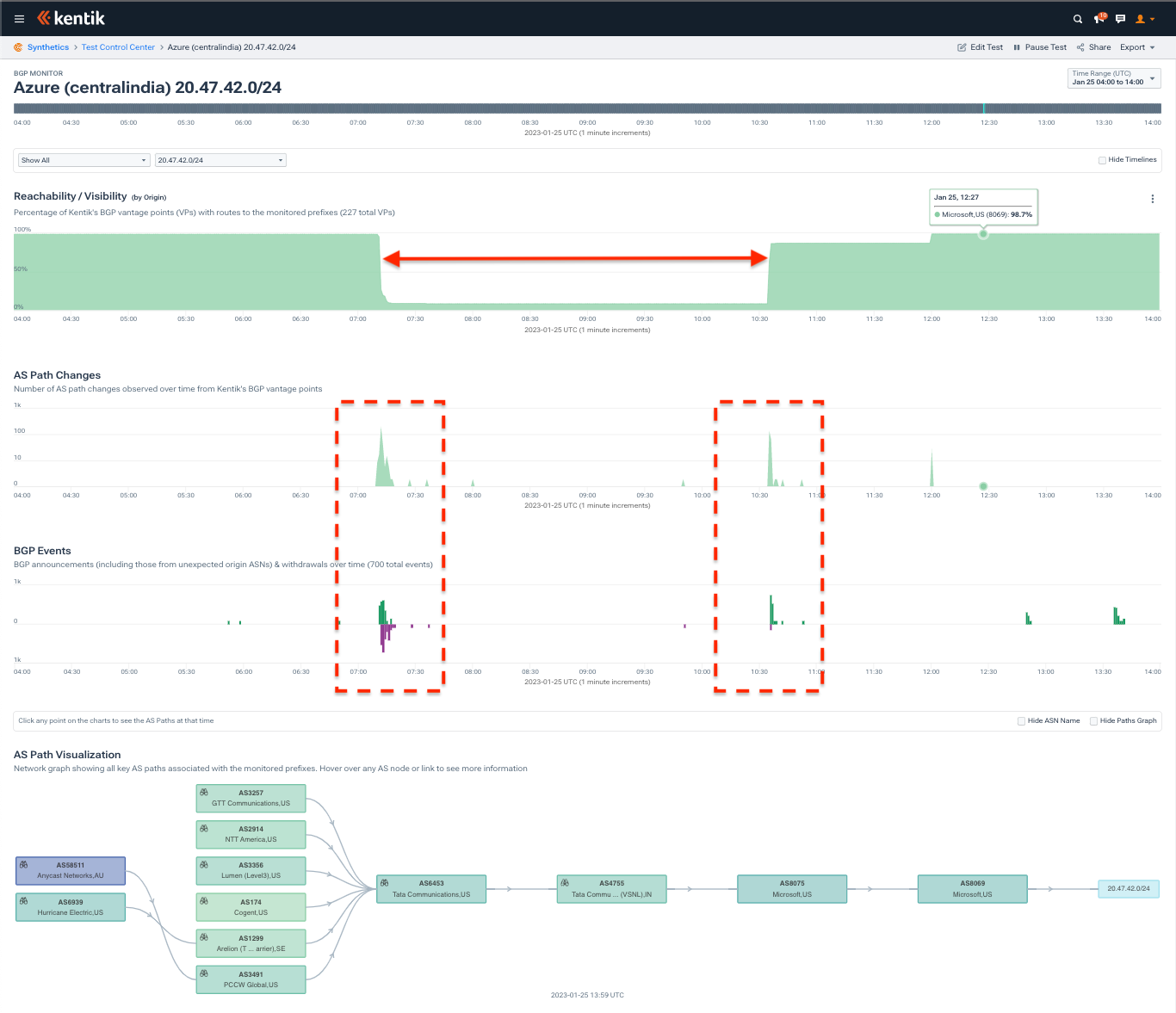

Consider the plight of 20.47.42.0/24, which is used at Azure’s centralindia region located in Pune, India. Kentik’s BGP monitoring, pictured below, presents three time series statistics above a ball-and-stick AS-level diagram.

In this particular case, the prefix was withdrawn at 07:12 and later restored at 10:36 UTC. The upper plot displays the percentage of our BGP sources that had 20.47.42.0/24 in their routing tables over this time period — the outage is marked with an arrow. The route withdrawals and re-announcements are tallied in the BGP Events timeline and contribute to corresponding spikes of AS Path Changes.
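The upper plot’s metric is a simple ratio: of all BGP vantage points, how many currently carry the prefix. A minimal sketch, assuming each vantage point’s routing table is available as a set of prefix strings; the vantage point names and sample timestamps are hypothetical.

```python
from typing import Dict, Iterable, List, Set, Tuple


def visibility_pct(tables: Dict[str, Set[str]], prefix: str) -> float:
    """Percent of vantage points whose routing table contains `prefix`."""
    if not tables:
        return 0.0
    seen = sum(1 for routes in tables.values() if prefix in routes)
    return 100.0 * seen / len(tables)


def visibility_series(snapshots: Iterable[Tuple[str, Dict[str, Set[str]]]],
                      prefix: str) -> List[Tuple[str, float]]:
    """Turn (timestamp, tables) snapshots into a visibility time series."""
    return [(ts, visibility_pct(tables, prefix)) for ts, tables in snapshots]


PREFIX = "20.47.42.0/24"
# Hypothetical vantage points; vp4 never carried the route.
before = {"vp1": {PREFIX}, "vp2": {PREFIX}, "vp3": {PREFIX}, "vp4": set()}
after = {vp: set() for vp in before}  # prefix withdrawn everywhere
series = visibility_series([("07:00", before), ("07:15", after), ("10:40", before)], PREFIX)
print(series)  # [('07:00', 75.0), ('07:15', 0.0), ('10:40', 75.0)]
```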

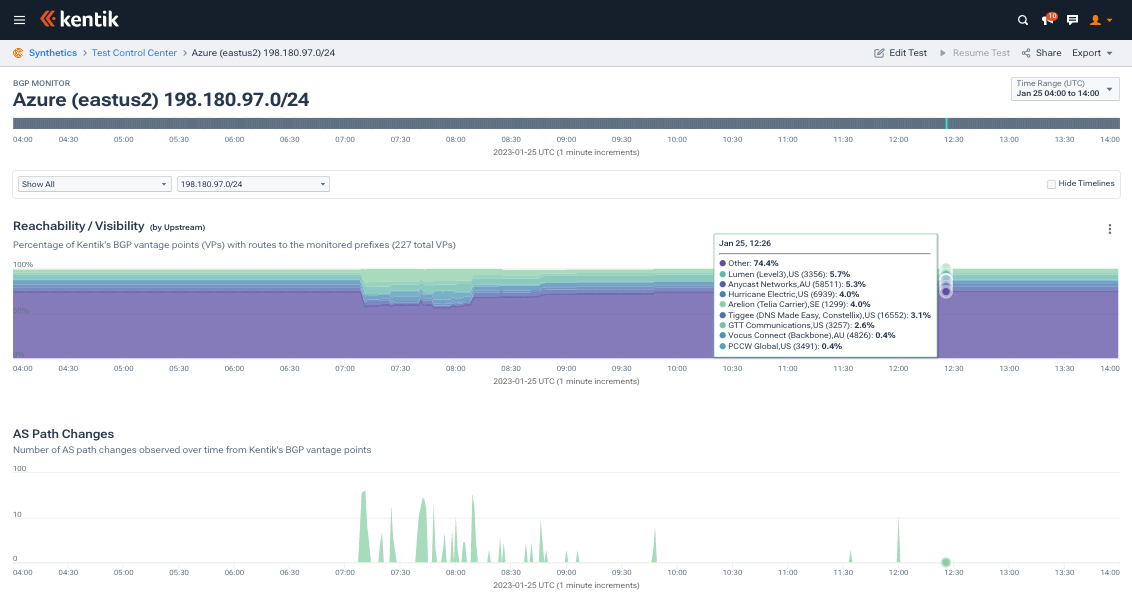

Not every Microsoft prefix experienced such a cut-and-dried outage. Consider 198.180.97.0/24, used in eastus2, pictured below. The upper graphic is a stacked plot that conveys how the internet reaches AS8075 for this prefix over time. As is common with cloud providers, AS8075 is a highly peered network, meaning much of the internet reaches it via a myriad of peering relationships.

Since each of those relationships is seen by only a few BGP vantage points, they are grouped together in the Other category (deep purple band above). Once the problems start at 07:09 UTC, we can see spikes in the AS Path Changes timeline indicating that something is happening with 198.180.97.0/24.

At that time, the share of Other decreases in the stacked plot, meaning many networks on the internet are losing their peering with Microsoft. As Other shrinks, the shares of AS8075’s transit providers (Arelion, Lumen, GTT, and Vocus) grow: having lost a portion of its peering links to Microsoft, the internet shifts to reaching AS8075 via transit. This is consistent with the NetFlow from our service provider customers in Asia described in the previous section.
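The stacked plot can be approximated by taking, for each vantage point, the AS immediately upstream of the origin and lumping rarely seen upstreams into Other. The sketch below assumes a cutoff of three vantage points and uses documentation ASNs (RFC 5398, 64496 to 64511) as stand-ins for peers; Kentik’s real grouping rule is not described in the post. AS1299 is Arelion’s ASN.

```python
from collections import Counter
from typing import Dict, List


def upstream_of(path: List[int]) -> int:
    """Return the AS adjacent to the origin, collapsing AS-path prepending."""
    collapsed = [asn for i, asn in enumerate(path) if i == 0 or asn != path[i - 1]]
    # A one-hop path means the vantage point is the origin itself.
    return collapsed[-2] if len(collapsed) >= 2 else collapsed[-1]


def upstream_shares(paths: Dict[str, List[int]],
                    min_vantage_points: int = 3) -> Dict[str, float]:
    """Percent share of each upstream AS; rare upstreams are lumped into "Other"."""
    counts = Counter(upstream_of(p) for p in paths.values())
    grouped: Counter = Counter()
    for asn, n in counts.items():
        grouped[f"AS{asn}" if n >= min_vantage_points else "Other"] += n
    total = sum(grouped.values())
    return {label: 100.0 * n / total for label, n in grouped.items()}


# Three vantage points reach AS8075 via transit (AS1299); three via distinct peers.
paths = {
    "vp1": [64496, 1299, 8075],
    "vp2": [64497, 1299, 8075, 8075],  # prepended origin
    "vp3": [1299, 8075],
    "vp4": [64500, 8075],
    "vp5": [64501, 8075],
    "vp6": [64502, 8075],
}
print(upstream_shares(paths))  # {'AS1299': 50.0, 'Other': 50.0}

# Simulate two peers losing their sessions with AS8075 and failing over to transit.
paths["vp4"] = [64500, 1299, 8075]
paths["vp5"] = [64501, 1299, 8075]
print(upstream_shares(paths))  # Other shrinks, AS1299 grows
```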

Azure outage’s BGP hijacks

The most curious part of this outage is that AS8075 appeared to begin announcing a number of new prefixes, some of which are hijacks of routed address space belonging to other networks. At the time of this writing, these routes are still in circulation.

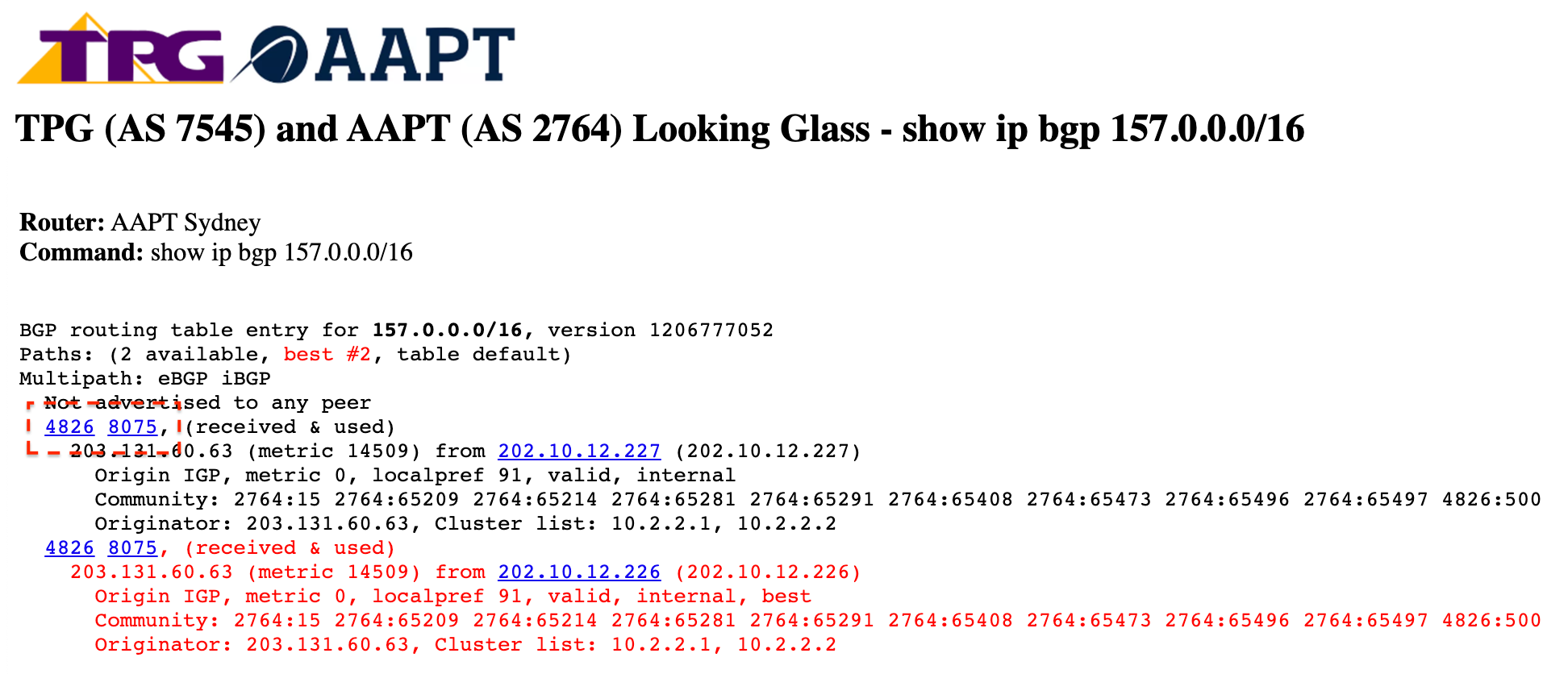

Between 07:42 and 07:48 UTC on January 25th, 157.0.0.0/16, 158.0.0.0/16, 159.0.0.0/16, and 167.0.0.0/16 appeared in the global routing table with the following AS path: … 2764 4826 8075.

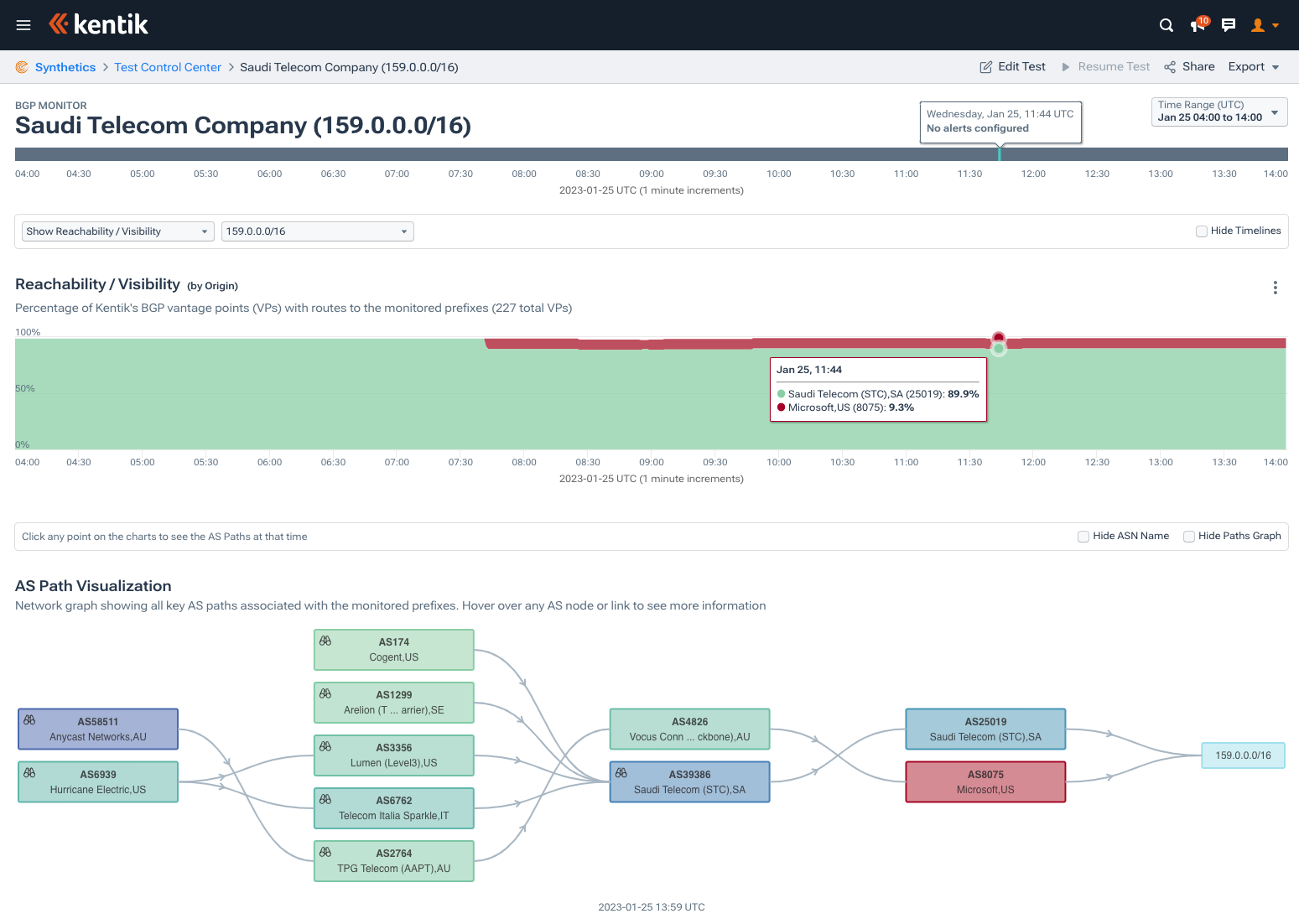

AS2764 and AS4826 belong to the Australian telecoms AAPT and Vocus, respectively. The problem is that the first three prefixes belong to China Unicom, the US Department of Defense, and Saudi Telecom Company (STC), and were already being originated by AS4837, AS721, and AS25019, respectively. The fourth, 167.0.0.0/16, is presently unrouted but belongs to Telefónica Colombia.
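Origin hijacks like these are typically flagged by comparing the last AS in an observed path with the AS expected to originate the prefix. A minimal sketch, assuming a hypothetical expected-origin table filled in with the origins cited above; because the post elides the leading hops of the path, the example uses only the tail 2764 4826 8075.

```python
import ipaddress
from typing import Dict, List, Optional

# Hypothetical expected-origin table, filled in with the origins cited above.
EXPECTED_ORIGIN: Dict[str, int] = {
    "157.0.0.0/16": 4837,   # China Unicom
    "158.0.0.0/16": 721,    # US Department of Defense
    "159.0.0.0/16": 25019,  # Saudi Telecom Company
}


def parse_path(text: str) -> List[int]:
    """Parse a space-separated AS path such as "2764 4826 8075"."""
    return [int(asn) for asn in text.split()]


def check_origin(prefix: str, path: List[int],
                 expected: Optional[Dict[str, int]] = None) -> str:
    """Return "ok", "mismatch", or "unknown" for an observed route."""
    table = EXPECTED_ORIGIN if expected is None else expected
    want = table.get(str(ipaddress.ip_network(prefix)))
    if want is None:
        return "unknown"  # no expectation on file, e.g. unrouted space
    return "ok" if path[-1] == want else "mismatch"


# The post elides the leading hops, so only the tail of the path is used here.
observed = parse_path("2764 4826 8075")
for pfx in ["157.0.0.0/16", "158.0.0.0/16", "159.0.0.0/16", "167.0.0.0/16"]:
    print(pfx, check_origin(pfx, observed))
```

Note that the unrouted 167.0.0.0/16 comes back as "unknown" rather than "mismatch": a check that only knows about currently routed space cannot tell whether AS8075 is authorized to originate it.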

Below is Kentik’s BGP visualization showing that 9.3% of our BGP vantage points see AS8075 as the origin of 159.0.0.0/16, the prefix belonging to the Saudi Arabian incumbent.

Shortly afterwards, AS8075 also began appearing as the origin of several other large ranges, including 70.0.0.0/15 (a more-specific of T-Mobile’s 70.0.0.0/13). All of these routes contain AS paths ending with “2764 4826 8075”, and, for the time being, they have largely remained within Australia, limiting the disruption that they might have otherwise caused.
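A more-specific such as 70.0.0.0/15 is especially dangerous because longest-prefix matching prefers it over the covering 70.0.0.0/13 wherever it propagates. The sketch below uses Python’s standard ipaddress module to find covering routes whose origin differs from the new announcement’s. The origin ASN for 70.0.0.0/13 is a documentation ASN (64511) standing in for T-Mobile’s, which the post does not state.

```python
import ipaddress
from typing import Dict, List, Tuple


def covering_routes(prefix: str, table: Dict[str, int]) -> List[Tuple[str, int]]:
    """Return (prefix, origin) entries in `table` that strictly cover `prefix`."""
    new = ipaddress.ip_network(prefix)
    hits = []
    for pfx, origin in table.items():
        net = ipaddress.ip_network(pfx)
        # subnet_of() raises TypeError across IP versions, so compare versions first.
        if net.version == new.version and net != new and new.subnet_of(net):
            hits.append((pfx, origin))
    return hits


def suspicious_more_specific(prefix: str, origin: int,
                             table: Dict[str, int]) -> List[Tuple[str, int]]:
    """Covering routes whose origin differs from the new announcement's origin."""
    return [(p, o) for p, o in covering_routes(prefix, table) if o != origin]


# 64511 is a documentation ASN standing in for T-Mobile's origin.
table = {"70.0.0.0/13": 64511, "157.0.0.0/16": 4837}
print(suspicious_more_specific("70.0.0.0/15", 8075, table))  # [('70.0.0.0/13', 64511)]
```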

Strangely, although AS4826 appears in the AS path, Vocus does not report these routes in its looking glass. Meanwhile, AAPT’s looking glass reports the routes as coming from Vocus (AS4826):

We contacted Microsoft and Vocus, and both are actively working to remove these problematic BGP routes.

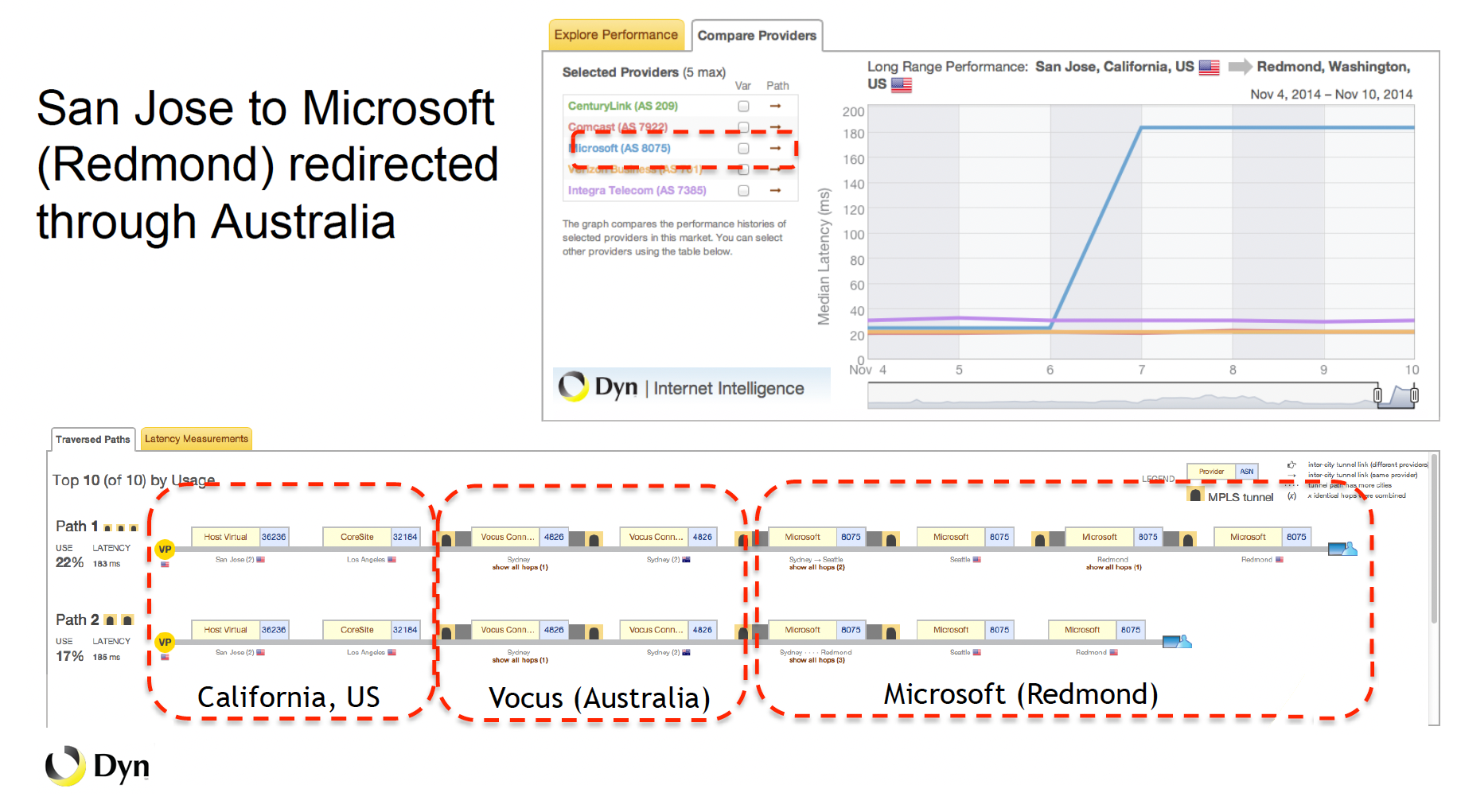

This isn’t the first routing snafu involving Microsoft and an Australian provider. In a presentation on routing leaks I gave at NANOG 63 eight years ago to the day, I described a situation where Microsoft had mistakenly removed the BGP communities that prevented the routes they announced through Vocus from propagating back to the US. The result was that for six days, traffic from users in the US going to Microsoft’s US data centers was routed through Australia, incurring a significant latency penalty.
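BGP communities like the ones Microsoft removed typically work by having the upstream provider’s export policy check for a tag before advertising a route to a given set of peers. The sketch below models that check. The community value, the region labels, and the policy itself are hypothetical, not Vocus’s actual configuration; the prefix is from the 192.0.2.0/24 documentation range.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

# Hypothetical "do not export to US peers" action community (documentation ASN).
NO_EXPORT_US = "64496:100"


@dataclass(frozen=True)
class Route:
    prefix: str
    as_path: Tuple[int, ...]
    communities: FrozenSet[str] = frozenset()


def export_allowed(route: Route, peer_region: str) -> bool:
    """Provider-side export check: honor the tag before advertising to a region."""
    if peer_region == "US" and NO_EXPORT_US in route.communities:
        return False
    return True


tagged = Route("192.0.2.0/24", (8075,), frozenset({NO_EXPORT_US}))
stripped = Route("192.0.2.0/24", (8075,))  # same route with the community removed
for r in (tagged, stripped):
    print(export_allowed(r, "AU"), export_allowed(r, "US"))
# True False
# True True
```

Once the tag is gone, nothing in this policy stops the provider from advertising the route to its US peers, which is how US networks can learn, and in some cases prefer, a path through Australia.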

Conclusion

Following the outage, Microsoft published a Post Incident Review which summarized the root cause in the following paragraph:

As part of a planned change to update the IP address on a WAN router, a command given to the router caused it to send messages to all other routers in the WAN, which resulted in all of them recomputing their adjacency and forwarding tables. During this re-computation process, the routers were unable to correctly forward packets traversing them.

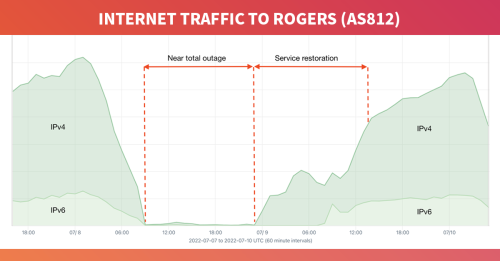

Reminiscent of the historic Facebook outage of 2021, a flawed router command appears to have taken down a portion of Azure’s WAN links. If I understand Microsoft’s explanation correctly, the command triggered an internal update storm. This is similar to what happened during the Rogers outage of summer 2022, when an internal routing leak overwhelmed Rogers’ routers, causing them to drop traffic. As with the Rogers outage, the instability we can observe in BGP was likely a symptom, not the cause, of this outage.

Managing the networking at a major public cloud like Microsoft’s Azure is no simple task, nor is analyzing their outages when they occasionally occur. Understanding all of the potential impacts and dependencies in a highly complex computing environment is one of the top challenges facing networking teams in large organizations today.

It is these networking teams that we think about at Kentik. The key is being able to answer any question from your telemetry tooling; this is a core tenet of Kentik’s approach to network observability.