Kentik Blog

Can BGP routing tables provide actionable insights for both engineering and sales? Kentik Detect correlates BGP with flow records like NetFlow to deliver advanced analytics that unlock valuable knowledge hiding in your routes. In this post, we look at our Peering Analytics feature, which lets you see whether your traffic is taking the most cost-effective and performant routes to its destination, and which networks you should peer with to reduce transit costs.

This week, Verizon was named a winner for network speeds. Mitel agreed to buy ShoreTel. Viacom said it wouldn’t buy Scripps. Packet grew globally. The Ericsson-Cisco partnership slowed. And feature articles highlighted Cuba’s internet and IT automation costs. More headlines after the jump…

One of Kentik Detect’s distinguishing features is that it is a high-performance network visibility solution available as a SaaS. Naturally, data security in the cloud can be an initial concern for many customers, but most end up opting for SaaS deployment. In this post we look at some of the top factors to consider in making that decision, and why most customers conclude that there’s no risk to taking advantage of Kentik Detect as a SaaS.

Google announced a new algorithm for higher bandwidths and lower latencies. NASA gave advice on IoT networks. Python is the most popular programming language. And Dow Jones is the latest to see an S3 misconfiguration.

What do summer blockbusters have to do with network operations? As utilization explodes and legacy tools stagnate, keeping a network secure and performant can feel like a struggle against evil forces. In this post we look at network operations as a hero’s journey, complete with the traditional three acts that shape most gripping tales. Can networks be rescued from the dangers and drudgery of archaic tools? Bring popcorn…

We’re very excited to share that KDDI, one of the world’s largest telecommunications companies, has selected the Kentik platform for network planning and operations, and to protect its key network assets and services. Read about the reasons that KDDI chose Kentik and why we’re so excited about this announcement.

Out with the old, in with the new, or so it seems this week. Apple has a new data center, its first in China. Intel announced a new line of microprocessors. Ericsson announced a new network services suite for IoT. Also this week, Kentik’s survey from Cisco Live reveals network challenges affecting digital transformation. More after the jump.

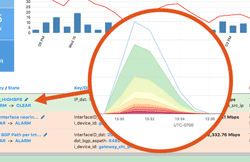

Operating a network means staying on top of constant changes in traffic patterns. With legacy network monitoring tools, you often can’t see these changes as they happen. Instead, you need a comprehensive visibility solution that includes real-time anomaly detection. Kentik Detect fits the bill with a policy-based alerting system that continuously evaluates incoming flow data. This post provides an overview of system features and configuration.

As one of 2017’s hottest networking technologies, SD-WAN is generating a lot of buzz, including at last week’s Cisco Live. But as enterprises rely on SD-WAN to enable Internet-connected services, thereby bypassing carrier MPLS charges, they face unfamiliar challenges related to the security and availability of remote sites. In this post we take a look at these new threats and how Kentik Detect helps protect against and respond to attacks.

Verizon’s deal with Yahoo may still be on the minds of many, but Disney may be Verizon’s next big brand of interest. Also on acquisitions, Forrester’s CEO says Apple should buy IBM. Juniper Research released a list of the most promising 5G operators in Asia. And the UN released a list of countries with cybersecurity gaps. More after the jump…