Kentik Blog

Whether it’s ’70s variety shows or today’s DDoS attacks, high-profile success begets replication. So the recent attack on Dyn by Mirai-marshalled IoT botnets won’t be the last severe disruption of Internet access and commerce. Until infrastructure stakeholders come together around meaningful, enforceable standards for network protection, the security and prosperity of our connected world remain at risk.

DDoS attacks pose a serious and growing threat, but traditional DDoS protection tools demand a plus-size capital budget. Many operators therefore rely instead on manually triggered RTBH, which is stressful, time-consuming, and error-prone. The solution is Kentik’s automated RTBH triggering, based on the industry’s most accurate DDoS detection, which sets up in under an hour with no hardware or software install.

Can legacy DDoS detection keep up with today’s attacks, or do inherent constraints limit network protection? In this post Jim Frey, Kentik VP Strategic Alliances, looks at how the limits of appliance-based detection systems contribute to inaccuracy — both false negatives and false positives — while the distributed big data architecture of Kentik Detect significantly enhances DDoS defense.

There’s a horrible tragedy taking place every day wherever legacy visibility systems are deployed: the needless slaughter of innocent NetFlow data records. NetFlow is cruelly disposed of, and the details it can reveal are blithely ignored in favor of shallow summaries. Kentik VP of Marketing Alex Henthorn-Iwane reports on this pressing issue and explains what each of us can do to help Save the NetFlow.

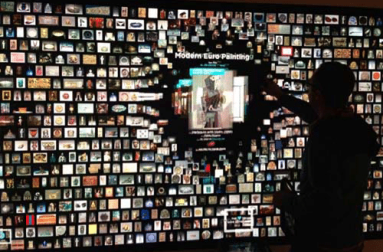

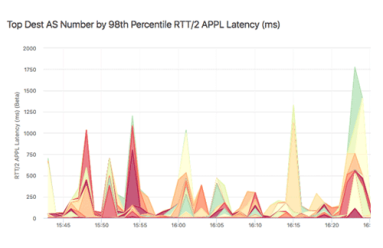

In this post, based on a webinar presented by Jim Frey, VP Strategic Alliances at Kentik, we look at how changes in network traffic flows (the shift to the Cloud, distributed applications, and digital business in general) have upended the traditional approach to network performance monitoring, and how NPM is evolving to handle these new realities.

Kentik recently launched Kentik NPM, a network performance monitoring solution for today’s digital business. Integrated into Kentik Detect, Kentik NPM addresses the visibility gaps left by legacy appliances, using lightweight software agents to gather performance metrics from real traffic. This podcast with Kentik CEO Avi Freedman explores Kentik NPM as a game-changer for performance monitoring.

As Gartner Research Director Sanjit Ganguli pointed out last May, network performance monitoring appliances that were designed for the era of WAN-connected data centers leave significant blind spots when monitoring modern applications that are distributed across the cloud. In this post Jim Frey, VP Strategic Alliances, explores Ganguli’s analysis and explains how Kentik NPM closes the gaps.

Network performance is mission-critical for digital business, but traditional NPM tools provide only a limited, siloed view of how performance impacts application quality and user experience. Solutions Engineer Eric Graham explains how Kentik NPM uses lightweight distributed host agents to integrate performance metrics into Kentik Detect, enabling real-time performance monitoring and response without expensive centralized appliances.