Summary

Last month at DENOG11 in Germany, Kentik Site Reliability Engineer Costas Drogos talked about the SRE team’s journey during the last four years of growing Kentik’s infrastructure to support thousands of BGP sessions with customer devices on Kentik’s multi-tenant SaaS (cloud) platform.

Costas shared the challenges the team overcame, the actions the team took, and the key takeaways.

BGP at Kentik

Costas started with a short introduction to how Kentik uses BGP, which sets the technical requirements:

- Kentik peers with customers, preferably with every BGP-speaking device that sends flows to our platform.

- Kentik’s BGP nodes act as a fully passive iBGP route reflector (i.e., we never initiate connections to customers), running on servers with Debian GNU/Linux.

- BGP peering uptime is part of Kentik’s contracted SLA of 99.99%.
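To put the 99.99% figure in perspective, the arithmetic below converts an availability target into a downtime budget. It is only a back-of-the-envelope sketch: the function name and the measurement periods are assumptions, and how the SLA is actually measured is not covered in the talk summary.

```python
def downtime_budget_minutes(availability_pct: float, period_days: float) -> float:
    """Minutes of allowed downtime in a period for a given availability target."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


# Assumed measurement periods: a 365-day year and a 30-day month.
per_year = downtime_budget_minutes(99.99, 365)
per_month = downtime_budget_minutes(99.99, 30)
print(f"{per_year:.2f} min/year, {per_month:.2f} min/30 days")
```

At this level, a single restart that drops all sessions for a few minutes can consume a month's budget, which is why the later phases focus on avoiding full restarts.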

At Kentik, we use BGP data not only to enrich flow data, so that queries can filter by BGP attributes, but also to calculate many other analytics from routing data. For example, you can see how much of your traffic is associated with RPKI-invalid prefixes; you can do peering analytics; if you have multiple sites, you can see how traffic gets in and out of your network (Kentik Ultimate Exit™); and, eventually, you can perform network discovery. Moreover, each BGP session can be used as the transport to push mitigations, such as RTBH and Flowspec, triggered by alerting from the platform.

Scaling phases

Costas then shared how the infrastructure has been built out from the beginning to today as Kentik’s customer base has grown.

Phase 1 - The beginning

Back in 2015, when we monitored approximately 200 customer devices, we started with 2 nodes in active/backup mode. The 2 nodes shared a floating IP for HA/failover, managed by ucarp, a userland implementation of OpenBSD’s CARP (a protocol similar to VRRP). The setup was started at boot time by a script residing in /root, invoked via rc.local.

This setup didn’t go very far as the number of BGP sessions grew rapidly. After a while, a single active node could no longer handle all peers and ran short of memory and CPU. With Kentik growing quickly, the solution needed to evolve.

Phase 2

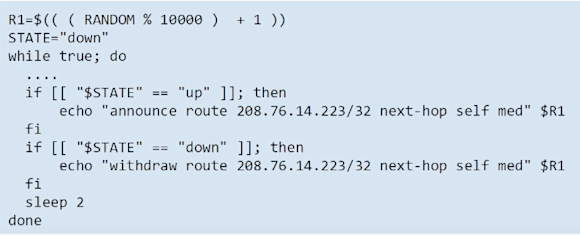

In order to fit more peers, we had to add extra BGP nodes. Looking at our setup, the first thing we did was to replace ucarp because we observed scaling issues with more than 2 nodes. We developed a home-grown shell script (called ‘bgp-vips’) that communicates with a spawned exaBGP. This took care of announcing our floating BGP IP, which was now provisioned on the host’s loopback interface. Each host then announced the route with a different MED so that we had multiple paths available at all times.
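The effect of announcing the same floating IP with different MEDs can be sketched as follows. This is a simplified model, assuming all other BGP attributes tie so that the lowest MED wins; the node names and MED values are hypothetical and not taken from the talk.

```python
from dataclasses import dataclass


@dataclass
class Announcement:
    node: str      # hypothetical node name
    med: int       # MED this node attaches to the floating IP route
    healthy: bool  # whether the node is still announcing the route


def best_path(announcements):
    """Return the announcement that attracts traffic: lowest MED among live ones."""
    live = [a for a in announcements if a.healthy]
    if not live:
        return None
    return min(live, key=lambda a: a.med)


paths = [
    Announcement("bgp1", 100, True),
    Announcement("bgp2", 200, True),
    Announcement("bgp3", 300, True),
]
primary = best_path(paths)

# If the preferred node withdraws its route, the next-lowest MED takes over.
paths[0].healthy = False
fallback = best_path(paths)
print(primary.node, "->", fallback.node)
```

Because every node keeps announcing the route, a backup path is already known to the fabric when the preferred one disappears.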

The next big step was to scale out the actual connections by allowing them to land on different nodes. On top of that, since our BGP nodes were identical, the sessions needed to be distributed evenly. Given that we only have one IP active on each node, the next step was to have this landing node act as a router for inbound BGP connections, with policy routing as the high-level design. The issue we then had to think about was how to achieve a uniform enough distribution. After testing multiple setups, we ended up using wildcard masks as the sieve to mark connections with.
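A minimal sketch of the mask-as-sieve idea, assuming the lowest two bits of the peer's source address select one of four nodes. The actual masks and marks used in production are not given in the talk summary, so the mask, node names, and addresses here are illustrative only.

```python
import ipaddress
from collections import Counter

# Hypothetical node names for a four-node setup.
NODES = ["bgp1", "bgp2", "bgp3", "bgp4"]

# Assumption: the two lowest bits of the source address pick the node.
WILDCARD_MASK = 0b11


def node_for_peer(src_ip: str) -> str:
    """Sieve a peer onto a node by masking bits out of its source address."""
    bucket = int(ipaddress.IPv4Address(src_ip)) & WILDCARD_MASK
    return NODES[bucket]


# Peers spread evenly over a subnet are split evenly across the nodes...
spread = Counter(node_for_peer(f"198.51.100.{host}") for host in range(1, 101))

# ...but peers that share the masked bits (here: every address ends in .1)
# all land on the same node.
skewed = Counter(node_for_peer(f"10.0.{net}.1") for net in range(100))

print(dict(spread))
print(dict(skewed))
```

The second counter shows the weakness of this approach: the distribution is only as uniform as the masked bits of the peers' addresses.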

While we were able to scale connections and achieved a mostly uniform distribution among the peering nodes, our setup was still not IPv6-ready and needed a full exaBGP restart upon any topology modification, resulting in BGP flaps for customers.

On top of that, we introduced RTBH for DDoS mitigation, which immediately raised the importance of having a stable BGP setup, since we were now actively protecting customers’ networks.

With the fast growth of Kentik, when we hit the 1,300-peers mark, a few more issues surfaced:

- Mask-based hashing was not optimal anymore.

- The home-grown shell script ‘bgp-vips’ was painful to work with day-to-day: modifying MEDs, changing health checks, or making the smallest topology change warranted a full restart, dropping all connections.

- IPv6 peerings were starting to outgrow a single node.

Phase 3

Improvement of the Phase 2 setup became imperative. Customers were being onboarded so rapidly that the only way forward was continued innovation.

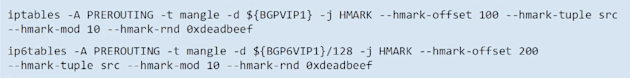

- The first thing to optimize was traffic distribution, to achieve better node utilization. We replaced mask-based routing with hash-based routing (HMARK). This offered us greater stability and, due to hashes having higher entropy than IPs, uniform-enough distribution. It also allowed mirroring the setup for our IPv6 fabric.

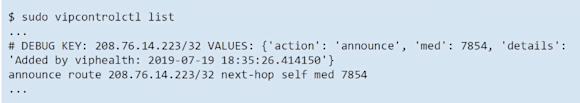

- The second thing to improve on was our BGP daemon. We replaced the ‘bgp-vips’ shell scripts with a real daemon written in Python, called ‘vipcontrol’, that communicates with a side-running exaBGP over a socket. With this new ‘vipcontrol’ daemon, we could modify runtime configuration and change MEDs on the fly. Another sidecar daemon, called ‘viphealth’, took care of health-checking the processes, handling IPs, and modifying MEDs as needed.
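The hash-based distribution from the first point above can be sketched as hashing the connection tuple and taking the result modulo the number of nodes. This is only an illustration of the idea: HMARK uses its own kernel hash function, and `zlib.crc32`, the node count, the seed parameter, and the addresses below are stand-ins chosen for this example.

```python
import zlib
from collections import Counter

NUM_NODES = 10  # assumed pool size for the example


def mark_for_connection(src_ip, src_port, dst_ip, dst_port, seed=0):
    """Map a connection tuple to a node index via a hash (CRC32 as a stand-in)."""
    key = f"{seed}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    return zlib.crc32(key) % NUM_NODES


VIP = "203.0.113.10"  # hypothetical floating IP

# Peers whose addresses all end in .1: a poor fit for a low-bits mask,
# but the hash still spreads them over several nodes.
peers = [(f"10.0.{net}.1", 40000 + net) for net in range(200)]
marks = Counter(mark_for_connection(ip, port, VIP, 179) for ip, port in peers)
print(sorted(marks.items()))
```

Because the node is derived from a hash rather than from raw address bits, the same scheme applies unchanged to IPv6 tuples.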

In the meantime, Kentik introduced Flowspec DDoS mitigations, so offering stable BGP sessions became even more important for Kentik.

Phase 4 - Today

Today, Kentik continues to grow, peering with more than 4,000 customer devices. Building on previous experience, we decided to create a new design and started by setting its requirements. The new setup should:

- Support both IPv4 and IPv6

- Keep the traffic distribution uniform, as our nodes are all identical

- Scale horizontally to accommodate future growth demands

- Persist all state in our configuration management code tree

- Simplify day-to-day operations such as adding a node, removing a node, and code deploys, while keeping all these operations transparent to customers

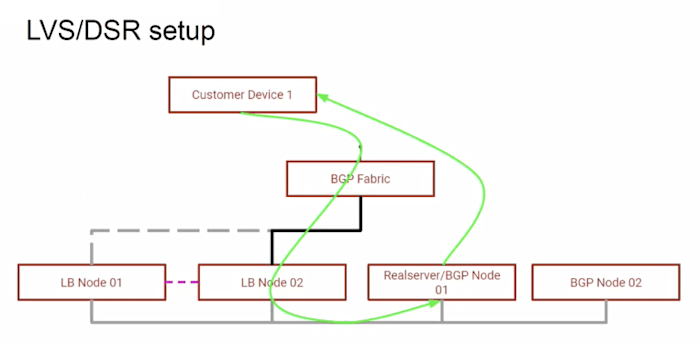

We tested different designs during an evaluation cycle and decided to go with LVS/DSR (Linux Virtual Server / Direct Server Return), a load-balancing setup traditionally used in front of websites that also works well for BGP connections.

Here is how it works:

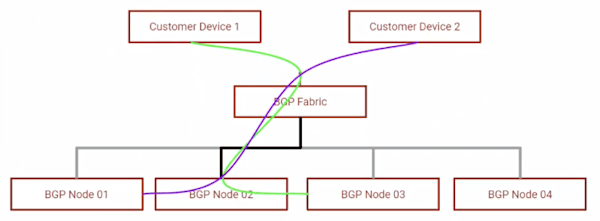

- A customer device initiates a connection.

- The floating IP is announced to the BGP fabric, so the connection is routed toward it.

- The connection is then passed on to the load balancer node (which doesn’t run BGP code).

- The load balancer node rewrites the destination MAC address of the packet to that of the selected real server, which runs the BGP code, and forwards the packet to it without touching the IP header.

- The real server terminates the connection by replying directly to the customer (so only the inbound direction of the TCP connection passes through the load balancing node).
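The packet flow above can be modeled in a few lines. This is a toy simulation, assuming the usual LVS direct-routing behavior in which the frame is re-addressed at layer 2 while the IP header keeps the floating IP as destination; all MAC and IP addresses and the function names are made up for the example.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str
    payload: str


VIP = "203.0.113.10"              # hypothetical floating IP
ROUTER_MAC = "02:00:00:00:00:aa"  # hypothetical upstream router
DIRECTOR_MAC = "02:00:00:00:00:ff"
REAL_SERVERS = {"rs1": "02:00:00:00:00:01", "rs2": "02:00:00:00:00:02"}


def director_forward(frame: Frame, server: str) -> Frame:
    """Load balancer: re-address the frame at layer 2 only; IPs stay untouched."""
    return replace(frame, src_mac=DIRECTOR_MAC, dst_mac=REAL_SERVERS[server])


def real_server_reply(frame: Frame, server: str) -> Frame:
    """Real server: answer from the VIP straight to the router, bypassing the director."""
    return Frame(
        src_mac=REAL_SERVERS[server],
        dst_mac=ROUTER_MAC,
        src_ip=frame.dst_ip,  # the VIP, configured locally on the real server
        dst_ip=frame.src_ip,
        payload="SYN-ACK",
    )


inbound = Frame(ROUTER_MAC, DIRECTOR_MAC, "198.51.100.7", VIP, "SYN")
forwarded = director_forward(inbound, "rs1")
reply = real_server_reply(forwarded, "rs1")
print(forwarded)
print(reply)
```

Since the reply never crosses the load balancer, the balancer only handles the inbound half of each session.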

Under the hood, the new design uses the following:

- BIRD (BIRD Internet Routing Daemon) replaces exaBGP, with BFD added on top for faster route failover. BIRD is used to announce the floating IP into Kentik’s fabric.

- Keepalived runs in LVS mode, with health checks for pooling/depooling real servers.

- Load balancing nodes use IPVS to sync connection state, so that if a load balancing node fails, TCP connections remain intact as they move to the other load balancing node.

- All of the configuration is kept in Puppet and Git, so that we can track every change that goes into the whole configuration.
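The pooling/depooling behavior driven by health checks can be sketched like this. It is not Keepalived's implementation, only a minimal model of the idea: `tcp_check`, `refresh_pool`, and the server names are hypothetical, and a real checker would typically also apply retry and delay thresholds.

```python
import socket


def tcp_check(host: str, port: int = 179, timeout: float = 1.0) -> bool:
    """Example health check: can a TCP connection to the BGP port be opened?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def refresh_pool(pool: set, real_servers: list, check) -> set:
    """Pool servers that pass the check and depool those that fail it."""
    for server in real_servers:
        if check(server):
            pool.add(server)
        else:
            pool.discard(server)
    return pool


# Demo with a stubbed check so that no network access is needed.
real_servers = ["rs1", "rs2", "rs3"]
health = {"rs1": True, "rs2": True, "rs3": True}
pool = refresh_pool(set(), real_servers, health.get)

health["rs2"] = False  # rs2 starts failing its health check
pool = refresh_pool(pool, real_servers, health.get)
print(sorted(pool))  # ['rs1', 'rs3']
```

In a live setup, `tcp_check` (or a deeper application-level probe) would take the place of the stubbed `health.get`.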

Today, we’re testing the new setup in our staging environment, evaluating the pros and cons and tuning it to ensure it’s going to meet future scaling requirements as we begin to support tens of thousands of BGP connections.

Recap

Over the past four years of rapid growth at Kentik, we evolved the backend for our BGP route ingestion in four major phases to meet scaling requirements and improve our setup’s reliability:

- 2 nodes in active-backup, using ucarp

- 4 active nodes with mask-based hashing, using exaBGP in our HA setup

- 10 active nodes with full-tuple hashing and support for balancing IPv6

- 16+ nodes with LVS/DSR and IPVS, now under testing

To watch the complete talk from DENOG, please check out this YouTube video.

To learn more about Kentik, sign up for a free trial or schedule a demo with us.