Network Performance Monitoring (NPM): Importance, Benefits, and Solutions

Network performance monitoring (NPM) is essential for understanding and optimizing the performance of today’s increasingly complex digital networks. This fundamental process helps NetOps teams detect and resolve issues proactively, optimize overall network performance, and ensure superior end-user experiences. Choosing the best network performance monitoring tool requires NetOps professionals to understand the complexities of today’s cloud-based networks and the evolving capabilities of network monitoring solutions.

What is Network Performance Monitoring (NPM)?

Network performance monitoring (NPM) is the process of measuring, diagnosing, and optimizing the service quality of a network as experienced by users. Network performance monitoring tools combine various types of network data (for example, packet data, network flow data, metrics from multiple types of network infrastructure devices, and synthetic tests) to analyze a network’s performance, availability, and other important metrics.

NPM solutions may enable real-time, historical, or even predictive analysis of a network’s performance over time. NPM solutions can also play a role in understanding the quality of end-user experience using network performance data—especially data gathered from active, synthetic testing (in contrast to passive forms of network performance monitoring such as packet or flow data collection).

Why is Network Performance Monitoring Important?

Network performance monitoring (NPM) is essential for ensuring network reliability, optimizing performance, and enhancing security. NPM tools help detect issues proactively, reducing downtime and improving the user experience. NPM also provides insights for resource allocation and network capacity planning, which can lower costs, and it can identify unusual traffic patterns that may indicate security threats, allowing for quick intervention. Together, these capabilities help keep networks efficient, resilient, and secure, supporting business success and user satisfaction.

Key Network Performance Monitoring Metrics and Data

NPM requires multiple types of measurement or monitoring data on which engineers can perform diagnoses and analyses. Example categories of network performance metrics are:

- Bandwidth: Measures the maximum rate at which data can be transferred through various points of the network or along a network path, comparing raw (total) capacity with the capacity actually available. (Learn more about bandwidth utilization monitoring.)

- Throughput: Measures how much data is being transferred, or has been transferred, over a given period.

- Latency: Measures network delay as experienced by clients, servers, applications, and network devices. Latency is often measured as round-trip time (RTT): the time for a packet to travel from its source to its destination plus the time for the response to return to the source.

- Packet Loss: Measures the percentage of data packets that fail to reach their intended destination. With reliable protocols such as TCP, high packet loss also raises effective latency, because the sender must retransmit the lost packets, delaying data delivery.

- Jitter: Measures the variation in packet arrival intervals, that is, how much latency fluctuates over time.

- Errors: Measures raw counts and percentages of errors, such as bit errors, TCP retransmissions, and out-of-order packets.
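As a rough illustration of how several of these metrics relate, here is a minimal Python sketch that summarizes a series of probe results. It assumes each probe yields a round-trip time in milliseconds, with `None` marking a probe that got no reply. The function names are hypothetical, and the jitter calculation is a simplified mean of consecutive RTT differences rather than the smoothed estimator defined in RFC 3550.

```python
from statistics import mean


def summarize_probes(rtts_ms):
    """Summarize probe results: loss %, average RTT, and a simple jitter.

    rtts_ms: round-trip times in milliseconds; None means the probe
    received no reply and is counted as lost.
    """
    if not rtts_ms:
        raise ValueError("need at least one probe result")
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    avg_rtt = mean(received) if received else None
    # Simplified jitter: mean absolute difference between consecutive
    # received RTTs (RFC 3550 uses a smoothed variant of this idea).
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter = mean(diffs) if diffs else 0.0
    return {"loss_pct": loss_pct, "avg_rtt_ms": avg_rtt, "jitter_ms": jitter}


def throughput_mbps(bytes_transferred, seconds):
    """Throughput in megabits per second over a measurement interval."""
    return bytes_transferred * 8 / seconds / 1_000_000
```

For example, `summarize_probes([20.0, 22.0, None, 21.0, 25.0])` reports 20% loss, a 22 ms average RTT, and about 2.3 ms of jitter, while `throughput_mbps(125_000_000, 10)` gives 100 Mbps.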


NPM vs. NPMD

NPM solutions are sometimes referred to as “Network Performance Monitoring and Diagnostics” (NPMD) solutions. Most notably, industry analyst firm Gartner calls this the NPMD market, which it defines (in the 2020 Market Guide for Network Performance Monitoring and Diagnostics) as “tools that leverage a combination of data sources. These include network-device-generated health metrics and events; network-device-generated traffic data (e.g., flow-based data sources); and raw network packets that provide historical, real-time, and predictive views into the availability and performance of the network and the application traffic running on it.”

Network Performance Monitoring Data Collection

Network performance monitoring has traditionally relied on data sources such as SNMP polling, traffic flow record export, and packet capture (PCAP) appliances to understand the status of network devices. Host monitoring agents combined with a SaaS/big data back end provide an additional, more cloud-friendly approach. Modern NPM solutions can also ingest and analyze the flow logs generated by cloud platforms such as AWS, Azure, and Google Cloud.

SNMP Polling

SNMP (Simple Network Management Protocol) is an IETF standard protocol and the most common method for gathering per-interface bandwidth usage, CPU and memory utilization, error counts, and other device-specific information. SNMP uses a polling-based approach: a management station periodically reads values defined in management information bases (MIBs), such as the standard MIB-II for TCP/IP-based networks. Large networks are typically polled only at five-minute intervals to avoid overloading the network with management traffic. A downside of SNMP polling is its lack of granularity: multi-minute polling intervals can mask the bursty nature of network traffic, and interface counters provide only an interface-centric view.
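To make the counter arithmetic concrete, here is a minimal Python sketch of how a poller might turn two successive readings of an octet counter (such as MIB-II `ifInOctets`) into average utilization for one polling interval. It assumes the interface speed is known in bits per second (as reported by `ifSpeed`) and that the counter wrapped at most once between polls; the function names are hypothetical.

```python
COUNTER32_MODULUS = 2**32  # MIB-II octet counters are 32-bit and wrap to zero


def counter_delta(prev, curr, modulus=COUNTER32_MODULUS):
    """Difference between two counter readings, tolerating a single wrap."""
    return (curr - prev) % modulus


def utilization_pct(prev_octets, curr_octets, interval_s, if_speed_bps,
                    modulus=COUNTER32_MODULUS):
    """Average interface utilization (%) over one polling interval."""
    bits = counter_delta(prev_octets, curr_octets, modulus) * 8
    return 100.0 * bits / (interval_s * if_speed_bps)
```

For instance, readings of 3,000,000,000 and 2,455,032,704 taken 300 seconds apart on a 1 Gbps interface imply the counter wrapped once and the link averaged 10% utilization. At 1 Gbps a 32-bit octet counter can wrap in roughly 34 seconds, so for fast interfaces polled every few minutes, the 64-bit IF-MIB counters (`ifHCInOctets`, with `modulus=2**64`) are the safer choice.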

Streaming Telemetry

Streaming telemetry is sometimes described as the next evolutionary step from SNMP in network monitoring metrics collection. It differs from SNMP in how it works, if not in the information it provides. Streaming telemetry solves some of the problems inherent in the polling-based approach of SNMP.

The various forms of streaming telemetry, such as gNMI (typically carrying YANG-modeled data) and vendor-specific proprietary methods, deliver data from network devices with very little delay. Instead of waiting minutes for the next poll, network administrators receive information about their devices in near real time.

Because devices push data continuously or at short intervals, rather than waiting to be polled at prescribed intervals, streaming telemetry can provide far higher-resolution data than SNMP. This push-based model is also generally more efficient. On some platforms, telemetry processing happens in hardware at the ASIC itself instead of on the device’s CPU, so it can scale across larger networks without degrading the performance of individual network devices. (See our blog post for a more in-depth look at streaming telemetry vs SNMP.)
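The difference in resolution can be illustrated with a small simulation. The sketch below uses hypothetical numbers: one five-minute window of per-second link throughput that sits at 100 Mbps except for a 10-second burst to 1,000 Mbps. It compares what a single five-minute average (as from SNMP polling) reports with what 10-second samples (as a push-based telemetry stream might deliver) reveal. It does not implement any telemetry protocol.

```python
def bucket_averages(samples, bucket_size):
    """Average consecutive samples in fixed-size buckets."""
    buckets = [samples[i:i + bucket_size]
               for i in range(0, len(samples), bucket_size)]
    return [sum(b) / len(b) for b in buckets]


# Hypothetical per-second throughput (Mbps) for one 5-minute window:
# a steady 100 Mbps with a 10-second burst to 1,000 Mbps.
per_second = [100.0] * 300
per_second[120:130] = [1000.0] * 10

five_min = bucket_averages(per_second, 300)  # what a 5-minute poll reports
ten_sec = bucket_averages(per_second, 10)    # what 10-second samples show

print(f"5-minute average: {five_min[0]} Mbps; "
      f"peak 10-second average: {max(ten_sec)} Mbps")
```

The five-minute average reports a modest 130 Mbps, while the 10-second samples expose the full 1,000 Mbps burst that could have caused queuing or drops.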

Traffic Flow Record Export

Traffic flow records are generated by routers, switches, and dedicated software programs by monitoring key statistics for uni-directional “flows” of packets between specific source and destination IP addresses, protocols (TCP, UDP, ICMP), port numbers and ToS (plus other optional criteria). Every time a flow ends or hits a pre-configured timer limit, the flow statistics gathering is stopped, and those statistics are written to a flow record, which is sent or “exported” to a flow collector server.
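The flow-accounting mechanism described above can be sketched as a toy flow cache in Python. This is an illustration under stated assumptions, not any vendor's exporter: packets are keyed by a hypothetical 6-tuple (source and destination IP, protocol, ports, ToS), a flow "ends" when it has been idle longer than an inactive timeout or open longer than an active timeout, and "exporting" just appends the record to a list. The timeout defaults are arbitrary; real exporters also end TCP flows on FIN/RST and encode records in NetFlow or IPFIX formats.

```python
from dataclasses import dataclass


@dataclass
class FlowRecord:
    key: tuple          # (src_ip, dst_ip, protocol, src_port, dst_port, tos)
    first_seen: float   # timestamp of the first packet in the flow
    last_seen: float    # timestamp of the most recent packet
    packets: int = 0
    octets: int = 0


class FlowCache:
    """Toy flow cache: aggregates packets into unidirectional flows and
    exports a record when a flow goes idle (inactive timeout) or has been
    open too long (active timeout)."""

    def __init__(self, active_timeout=60.0, inactive_timeout=15.0):
        self.active_timeout = active_timeout
        self.inactive_timeout = inactive_timeout
        self.flows = {}       # key -> FlowRecord for flows still being measured
        self.exported = []    # records "sent" to the collector

    def expire(self, now):
        """Export and remove every flow whose timer has run out."""
        for key, rec in list(self.flows.items()):
            idle = now - rec.last_seen >= self.inactive_timeout
            too_long = now - rec.first_seen >= self.active_timeout
            if idle or too_long:
                self.exported.append(self.flows.pop(key))

    def add_packet(self, ts, src, dst, proto, sport, dport, tos, length):
        """Account one packet; the 6-tuple decides which flow it belongs to."""
        self.expire(ts)
        key = (src, dst, proto, sport, dport, tos)
        rec = self.flows.get(key)
        if rec is None:
            rec = self.flows[key] = FlowRecord(key, ts, ts)
        rec.last_seen = ts
        rec.packets += 1
        rec.octets += length
```

Because flows are unidirectional, a request and its response (with source and destination swapped) land in two separate records.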

There are several flow collection standards, including NetFlow, sFlow, and IPFIX. NetFlow was created by Cisco as a proprietary protocol and has become a de facto industry standard. sFlow and IPFIX are multi-vendor standards: sFlow was developed by InMon, and IPFIX, which is based on NetFlow version 9, is specified by the Internet Engineering Task Force (IETF).

Advantages of NetFlow as Part of Network Performance Monitoring

Flow records are far more voluminous than SNMP records but provide valuable details on actual traffic flows. The statistics from flow records can be utilized to create a picture of actual throughput. Flow information can also be used to calculate interface utilization by reference to total interface bandwidth. Additionally, since flow data must include source and destination IP addresses, it is possible to map recorded flows to routing data such as BGP routing internet paths. This data integration is highly valuable for network performance monitoring because the network or internet path may correlate to performance problems occurring in particular networks (known as Autonomous Systems in BGP parlance) that comprise an internet path.
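Mapping flows to BGP data boils down to a longest-prefix match of each flow's IP address against the routing table. The Python sketch below assumes a tiny, hypothetical routing table that maps prefixes (documentation ranges) to origin AS numbers (documentation ASNs), and a list of `(destination_ip, bytes)` pairs taken from flow records. It attributes traffic only to the origin AS; a production system would use a live BGP feed, a trie rather than a linear scan, and the full AS path.

```python
import ipaddress

# Hypothetical routing table: prefix -> origin AS (documentation values).
ROUTES = {
    "203.0.113.0/24": 64500,
    "203.0.113.128/25": 64501,   # more specific route inside the /24
    "198.51.100.0/24": 64502,
}


def build_table(routes):
    """Parse prefixes once, sorted longest first for longest-prefix match."""
    table = [(ipaddress.ip_network(p), asn) for p, asn in routes.items()]
    return sorted(table, key=lambda entry: entry[0].prefixlen, reverse=True)


def origin_as(table, addr):
    """Return the origin AS of the most specific matching prefix, or None."""
    ip = ipaddress.ip_address(addr)
    for net, asn in table:
        if ip in net:
            return asn
    return None


def bytes_by_origin_as(table, flows):
    """Sum flow bytes per destination origin AS (None = no matching route)."""
    totals = {}
    for dst, octets in flows:
        asn = origin_as(table, dst)
        totals[asn] = totals.get(asn, 0) + octets
    return totals
```

With the table above, traffic to 203.0.113.200 is attributed to AS 64501 rather than AS 64500, because the /25 is the more specific match.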

Network Flow Sampling

NetFlow records statistics based only on packet headers, not on payload contents, so the information is metadata rather than payload data. While it is possible to account for every packet, most practical implementations use some degree of “sampling,” in which the exporter examines only one in every N packets (commonly one in a thousand or more). Sampling limits the fidelity of NetFlow data, but in a large network even 1:8000 sampling is generally considered accurate enough for network performance management purposes, because the sheer volume of traffic still yields a statistically meaningful sample.
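Why sampled data remains useful can be shown with a quick simulation. The Python sketch below applies random 1-in-N packet sampling to synthetic traffic and then scales the sampled byte count back up by N, which is the usual way sampled flow volumes are extrapolated. The packet sizes, traffic volume, and sampling rate are all hypothetical choices for the demo, and the random generator is seeded so the run is repeatable.

```python
import random


def sample_packets(packet_sizes, rate, rng):
    """Random 1-in-N packet sampling: keep each packet with probability 1/rate."""
    return [size for size in packet_sizes if rng.random() < 1.0 / rate]


def estimate_total_bytes(sampled_sizes, rate):
    """Scale the sampled byte count back up by the sampling rate."""
    return sum(sampled_sizes) * rate


rng = random.Random(42)  # seeded so the demo is repeatable
# Synthetic traffic: 500,000 packets with a mix of common sizes (bytes).
packets = [rng.choice([64, 576, 1500]) for _ in range(500_000)]
rate = 100  # sample 1 in 100 packets

sampled = sample_packets(packets, rate, rng)
actual = sum(packets)
estimate = estimate_total_bytes(sampled, rate)
print(f"{len(sampled)} of {len(packets)} packets sampled; "
      f"estimate off by {abs(estimate - actual) / actual:.2%}")
```

The relative error shrinks roughly with the square root of the number of sampled packets, which is why aggressive rates such as 1:8000 remain workable on high-volume links but are less reliable for small, low-traffic flows.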

VPC/Cloud Flow Logs

Like flow records generated by network infrastructure components, cloud-based applications, systems, and virtual private clouds can also export network flow data. For example, in AWS (Amazon Web Services), virtual private clouds can be configured to capture and export “VPC Flow Logs” which provide information about the IP traffic going to and from network interfaces in a given VPC.

Depending on the provider, cloud flow logs may be aggregated or sampled: AWS VPC Flow Logs, for example, aggregate traffic into records over a configurable capture window, while Google Cloud VPC Flow Logs record a sample of packet flows. These logs cover network flows sent from and received by cloud infrastructure components such as virtual machine instances and Kubernetes nodes, and they can be ingested by an NPM solution to provide network monitoring and analytics for cloud-based networks.
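As an example of what ingesting cloud flow logs involves, here is a minimal Python sketch that parses one record in the AWS VPC Flow Logs default (version 2) format, which is a space-separated line of 14 fields. It assumes the default format only; custom log formats change the field list. The function name is hypothetical, and the sample lines follow the layout shown in AWS's documentation.

```python
# Field order of the AWS VPC Flow Logs default (version 2) record format.
DEFAULT_FIELDS = ["version", "account_id", "interface_id", "srcaddr", "dstaddr",
                  "srcport", "dstport", "protocol", "packets", "bytes",
                  "start", "end", "action", "log_status"]
INT_FIELDS = {"version", "srcport", "dstport", "protocol",
              "packets", "bytes", "start", "end"}


def parse_flow_log_line(line):
    """Turn one default-format flow log line into a dict of typed fields."""
    values = line.split()
    if len(values) != len(DEFAULT_FIELDS):
        raise ValueError(f"expected {len(DEFAULT_FIELDS)} fields, got {len(values)}")
    record = {}
    for name, value in zip(DEFAULT_FIELDS, values):
        if value == "-":              # NODATA/SKIPDATA records use "-" placeholders
            record[name] = None
        elif name in INT_FIELDS:
            record[name] = int(value)
        else:
            record[name] = value
    return record


rec = parse_flow_log_line(
    "2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
    "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK")
# start/end are Unix timestamps, so the record covers a 60-second window.
print(f"{rec['srcaddr']} -> {rec['dstaddr']}:{rec['dstport']} "
      f"{rec['bytes'] / (rec['end'] - rec['start']):.1f} bytes/s {rec['action']}")
```

Once parsed, records like this can be aggregated much like on-premises flow records, for example by destination, port, or accept/reject action.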

Packet Capture (PCAP) Software and Appliances

Packet capture involves recording every packet that passes across a particular network interface. With PCAP data, the information collected is granular since it includes both packet headers and full payload. Since an interface will see packets going in and out, PCAP can precisely measure latency between an outbound packet and its inbound response, for example. PCAP provides the richest source of network performance data.
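The latency measurement mentioned above can be sketched by pairing each TCP SYN with the SYN-ACK that comes back on the reversed address/port tuple. The Python example below assumes packets have already been decoded into simple records (capture timestamp, addresses, ports, and a flags string); the `Packet` type and the flag notation are hypothetical simplifications of what a real PCAP decoder produces.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    ts: float      # capture timestamp in seconds
    src: str
    sport: int
    dst: str
    dport: int
    flags: str     # simplified TCP flags: "S" = SYN, "SA" = SYN-ACK


def handshake_rtts(packets):
    """Match each SYN to the SYN-ACK on the reversed 4-tuple.

    Returns {(client_ip, client_port, server_ip, server_port): rtt_seconds}.
    """
    pending = {}   # outstanding SYNs keyed by their 4-tuple
    rtts = {}
    for p in sorted(packets, key=lambda p: p.ts):
        if p.flags == "S":
            pending[(p.src, p.sport, p.dst, p.dport)] = p.ts
        elif p.flags == "SA":
            conn = (p.dst, p.dport, p.src, p.sport)   # reverse direction
            if conn in pending:
                rtts[conn] = p.ts - pending.pop(conn)
    return rtts
```

When the capture point is next to the client, this interval approximates the network round trip to the server. A retransmitted SYN overwrites the earlier timestamp here, so the result reflects the most recent attempt; handling such ambiguous samples more carefully is left out of this sketch.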

PCAP can be performed on an individual server using open-source network utilities such as tcpdump and Wireshark. For a skilled technician, this can be a very effective way to understand network performance issues. However, because it is a manual process requiring fairly in-depth knowledge of these utilities, it does not scale.
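For readers curious about what a capture file actually contains, here is a minimal pure-Python reader for the classic libpcap file format: a 24-byte global header followed by records, each with a 16-byte header (seconds, microseconds, captured length, original length) and the captured bytes. It assumes microsecond-resolution classic pcap, the format `tcpdump -w` typically writes; it does not handle pcapng, which Wireshark uses by default. The function name is hypothetical.

```python
import struct


def read_pcap(data):
    """Yield (timestamp, captured_bytes, original_length) for each packet
    in a classic (microsecond) libpcap file held in memory."""
    if len(data) < 24:
        raise ValueError("truncated global header")
    magic = data[:4]
    if magic == b"\xd4\xc3\xb2\xa1":      # 0xa1b2c3d4 written little-endian
        endian = "<"
    elif magic == b"\xa1\xb2\xc3\xd4":    # 0xa1b2c3d4 written big-endian
        endian = ">"
    else:
        raise ValueError("not a classic microsecond pcap file")
    # Global header: magic, version major/minor, thiszone, sigfigs, snaplen, linktype.
    struct.unpack(endian + "IHHiIII", data[:24])
    offset = 24
    while offset + 16 <= len(data):
        ts_sec, ts_usec, incl_len, orig_len = struct.unpack(
            endian + "IIII", data[offset:offset + 16])
        offset += 16
        payload = data[offset:offset + incl_len]
        if len(payload) < incl_len:
            raise ValueError("truncated packet record")
        offset += incl_len
        yield ts_sec + ts_usec / 1e6, payload, orig_len
```

A typical use would be `with open("capture.pcap", "rb") as f:` followed by `for ts, pkt, orig_len in read_pcap(f.read()):`, where `capture.pcap` is a hypothetical file name. The captured length can be smaller than the original length when the capture was truncated by a snapshot length.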

To improve on this manual approach, an appliance-based PCAP probe may be used. The probe has multiple interfaces connected to router or switch SPAN (mirror) ports or to an intervening packet broker device (such as those offered by Gigamon or Ixia). Virtual probes can be used in some cases, but they still depend on receiving a copy of the traffic over some form of network link or traffic-mirroring mechanism.

Limitations of Packet Capture Appliances

A major downside to PCAP appliances is the expense of deployment. Physical and virtual appliances are costly in terms of hardware and, for commercial solutions, software licensing. As a result, in most cases it is only financially feasible to deploy PCAP probes at a relatively small number of selected points in the network. In addition, the appliance deployment model was developed based on pre-cloud assumptions of centralized data centers of limited scale, holding relatively monolithic application instances.

As cloud and distributed application models have proliferated, the appliance model for packet capture has become less feasible because application components are widely distributed across VMs and containers. In many cloud hosting environments, there is no way to deploy even a virtual appliance.

Host Agent Network Performance Monitoring

A cloud-friendly and highly scalable model for network performance monitoring combines lightweight host-based monitoring agents with a SaaS back end. The agents gather PCAP-based statistics on servers and on open-source proxy servers such as HAProxy and NGINX, then export those statistics to a SaaS repository that scales horizontally to store unsummarized data and provides big data-based analytics for alerting, diagnostics, and other use cases.

While host-based performance metric export doesn’t provide the full granularity of raw PCAP, it provides a highly scalable and cost-effective method for ubiquitously gathering, retaining, and analyzing critical performance data, and thus complements PCAP. An example of a host-based NPM agent is Kentik’s kprobe.
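The trade-off between raw PCAP and exported statistics can be illustrated with a sketch of the kind of summarization a host agent might perform. The Python example below is hypothetical and is not kprobe's actual logic or record format: it assumes per-packet observations of `(timestamp_s, rtt_ms, is_retransmit)` and collapses them into one compact record per 10-second interval (sample count, median and 95th-percentile RTT using the nearest-rank method, and retransmit count) that could be serialized and shipped to a back end.

```python
import json
import math
from collections import defaultdict


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def summarize(observations, interval_s=10):
    """Collapse per-packet observations into one record per interval.

    observations: iterable of (timestamp_s, rtt_ms, is_retransmit).
    """
    buckets = defaultdict(list)
    for ts, rtt_ms, retrans in observations:
        buckets[int(ts // interval_s) * interval_s].append((rtt_ms, retrans))
    records = []
    for start in sorted(buckets):
        rtts = sorted(rtt for rtt, _ in buckets[start])
        records.append({
            "interval_start": start,
            "samples": len(rtts),
            "rtt_p50_ms": percentile(rtts, 50),
            "rtt_p95_ms": percentile(rtts, 95),
            "retransmits": sum(1 for _, rt in buckets[start] if rt),
        })
    return records


# Hypothetical observations: ten packets in the first interval, one in the next.
obs = [(i, 10 + i, i == 3) for i in range(10)] + [(12, 50, False)]
print(json.dumps(summarize(obs), indent=2))
```

Each interval shrinks to a handful of numbers no matter how many packets it contained, which is what makes ubiquitous collection and long retention affordable, at the cost of no longer being able to inspect individual packets.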

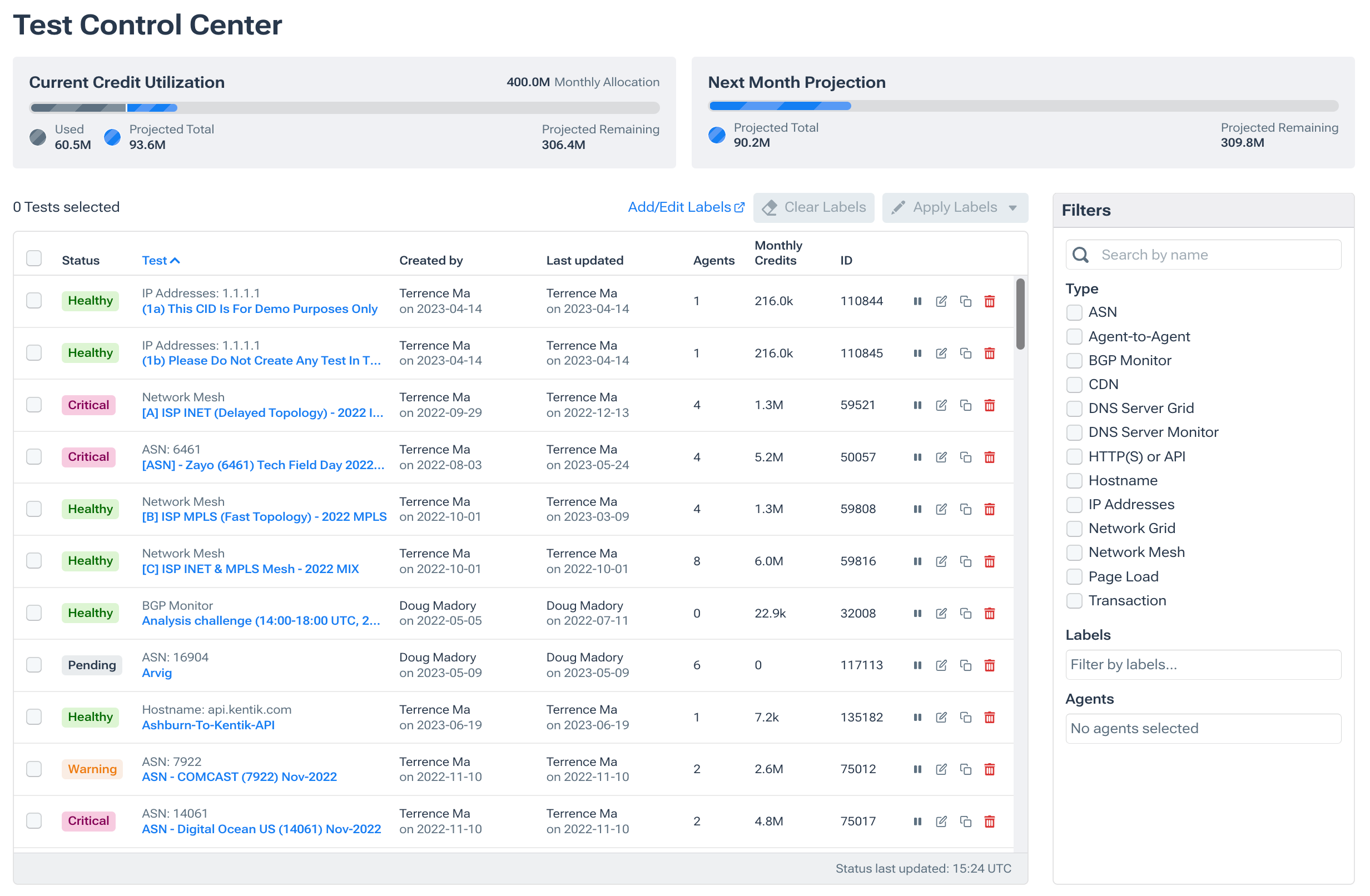

Synthetic Monitoring and Synthetic Testing

Modern network performance monitoring solutions increasingly incorporate synthetic monitoring features, which are traditionally associated with a process and market called “Digital Experience Monitoring.” In contrast to flow or packet capture (which we might characterize as passive forms of monitoring), synthetic monitoring is a means of proactively tracking the performance and health of networks, applications, and services.

In the networking context, synthetic monitoring means imitating different network conditions and/or simulating different user conditions and behaviors. It achieves this by generating various types of traffic (e.g., network-layer probes, DNS queries, and HTTP or web requests), sending that traffic to a specific target (e.g., an IP address, server, host, or web page), measuring the metrics associated with that “test,” and then building KPIs from those metrics.
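A single synthetic test of this kind can be sketched in a few lines of Python. The example below, a minimal sketch rather than how any particular platform works, repeatedly times a TCP handshake to a target with `socket.create_connection` and turns the results into two simple KPIs: success rate and median connect time. The function name, KPI names, and defaults are hypothetical; real synthetic monitoring runs many test types from many vantage points on a schedule.

```python
import socket
import statistics
import time


def tcp_connect_test(host, port, attempts=5, timeout_s=2.0):
    """Time repeated TCP handshakes to a target and build simple KPIs."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    latencies_ms = []
    failures = 0
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            # create_connection returns once the TCP handshake completes.
            with socket.create_connection((host, port), timeout=timeout_s):
                latencies_ms.append((time.perf_counter() - start) * 1000)
        except OSError:          # refused, unreachable, or timed out
            failures += 1
    return {
        "target": f"{host}:{port}",
        "success_pct": 100.0 * (attempts - failures) / attempts,
        "median_connect_ms": (statistics.median(latencies_ms)
                              if latencies_ms else None),
    }
```

For example, `tcp_connect_test("example.com", 443)` would report how reliably, and how quickly, a TCP session to that web server can be established from wherever the code runs. Tracking these values over time is what turns individual tests into KPIs.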

(See also: “What is Synthetic Transaction Monitoring”.)

Other Integrations Important to NPM and Network Observability

NPM data sources are not limited to the types described above and may include events, device metrics, streaming telemetry, and contextual information. In this short video, Kentik CEO Avi Freedman discusses the many types of data and integrations that are important to improving network observability.

This video is a brief excerpt from “5 Problems Your Current Network Monitoring Can’t Solve (That Network Observability Can)”—you can watch the entire presentation here.

What are the Benefits of Network Performance Monitoring?

Network performance monitoring tools provide numerous benefits for NetOps professionals and organizations. These benefits improve network visibility, reliability, and efficiency, ultimately leading to better user experiences and business outcomes. Key benefits of NPM include:

- Proactive issue detection: NPM tools enable early identification of network problems, allowing teams to address and resolve issues before they escalate and impact end users or critical business operations.

- Faster network troubleshooting: With comprehensive visibility into network traffic and performance data, NetOps professionals can more efficiently pinpoint the root cause of network issues, reducing the time it takes to resolve problems and minimizing network downtime.

- Optimized network performance: NPM solutions provide insights into network bottlenecks, latency, and other performance-related issues, enabling organizations to optimize their networks for better performance and user experiences.

- Capacity planning and resource allocation: By monitoring network utilization and performance trends, NPM tools help organizations make informed decisions about capacity planning, resource allocation, and infrastructure investments to meet current and future demands.

- Enhanced security: NPM solutions can help identify unusual traffic patterns, potential DDoS attacks, and other network security threats, allowing organizations to take swift action to protect their networks and data.

- Improved end-user experience: Proactively monitoring and optimizing network performance ultimately leads to better end-user experiences, which can have a direct impact on customer satisfaction, employee productivity, and overall business success.

Network Performance Monitoring Challenges

Despite the many benefits of network performance monitoring, there are also challenges that NetOps professionals and organizations must contend with. Some of the key challenges include:

- Complexity and scale: Modern networks are increasingly complex, spanning on-premises, cloud, hybrid, and container networking environments (e.g., Kubernetes). Managing and monitoring performance across these diverse and often large-scale networks can be challenging, particularly as organizations adopt new technologies and services.

- Data overload: Network monitoring systems generate vast amounts of data from multiple sources, including flow records, packet capture, and host agents. Managing and analyzing this data to extract meaningful insights can be overwhelming, requiring the right tools and expertise.

- Integration with other tools and systems: Network monitoring tools must often integrate with many other network management tools, security systems, and IT infrastructure components. Ensuring seamless integration and data sharing between these disparate systems can be challenging.

- Cost and resource constraints: Deploying and maintaining network performance monitoring solutions can be expensive, particularly for large-scale networks or when using appliance-based packet capture probes. Organizations must balance the need for comprehensive network monitoring and visibility with cost and resource constraints.

- Keeping pace with technological advancements: As network technologies evolve, network monitoring tools and methodologies must keep pace. NetOps professionals must stay informed about the latest developments and best practices in network performance monitoring to ensure their organizations remain agile and competitive.

Network Performance Monitoring Best Practices

To maximize the value of network performance monitoring and overcome its challenges, NetOps professionals should consider adopting the following best practices:

- Leverage multiple data sources: Utilize a variety of data sources, including SNMP polling, traffic flow records, packet capture, host agents, and synthetic monitoring, to gain a comprehensive view of network performance.

- Establish performance baselines: Determine baseline performance metrics for your network to identify deviations and potential issues quickly. Regularly review and update these baselines as your network evolves.

- Implement proactive monitoring and alerting: Set up proactive monitoring and alerting to detect performance issues before they impact end users or business operations. Fine-tune alert thresholds to minimize false positives and ensure timely notifications.

- Invest in scalable, cloud-friendly NPM solutions: Choose network performance monitoring tools that can scale to meet the growing needs of your entire network and are compatible with cloud-based, multicloud, and hybrid environments.

- Continuously review and optimize: Network performance monitoring should be an ongoing, iterative process. As the network environment changes, network administrators should continuously review and adjust monitoring strategies, baselines, and optimization efforts to ensure the network remains in optimal condition.
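The baselining and alert-tuning practices above can be combined in a small Python sketch. It assumes a simple static baseline (the mean and sample standard deviation of historical samples, such as latency in milliseconds) and fires an alert only when a metric stays more than `k` standard deviations from the mean for `min_consecutive` samples in a row, one common way to suppress one-off spikes. The function names and default thresholds are hypothetical; production tools often use time-of-day or seasonal baselines instead.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Baseline = (mean, sample standard deviation) of historical samples."""
    if len(history) < 2:
        raise ValueError("need at least two historical samples")
    return mean(history), stdev(history)


def sustained_breaches(samples, baseline, k=3.0, min_consecutive=3):
    """Return indexes where an alert would fire.

    A sample breaches when it deviates from the baseline mean by more than
    k standard deviations; an alert fires once per run of breaches, when
    the run reaches min_consecutive samples.
    """
    mu, sigma = baseline
    alerts, run = [], 0
    for i, value in enumerate(samples):
        run = run + 1 if abs(value - mu) > k * sigma else 0
        if run == min_consecutive:
            alerts.append(i)
    return alerts


history = [20, 21, 19, 20, 22, 18, 20, 21, 19, 20]   # hypothetical latency (ms)
recent = [21, 35, 20, 19, 30, 31, 33, 34, 20]
print(sustained_breaches(recent, build_baseline(history)))
```

In this example the isolated 35 ms spike is ignored, and a single alert fires at the third consecutive elevated sample. Raising `min_consecutive` or `k` trades detection speed for fewer false positives.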

About Kentik’s Network Performance Monitoring Solutions

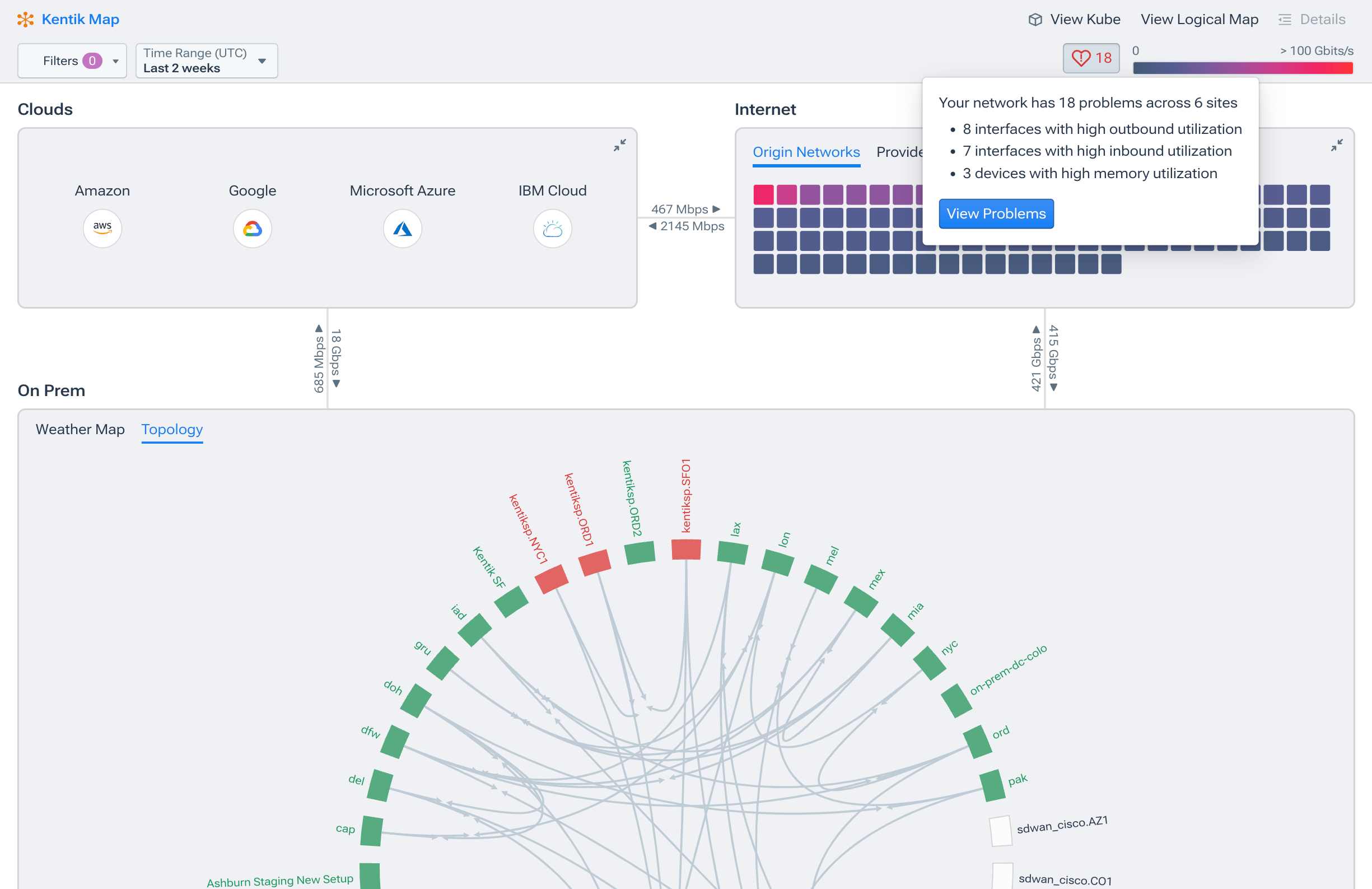

Kentik offers a suite of advanced network monitoring solutions designed for today’s complex, multicloud network environments. The Kentik Network Observability Platform empowers network pros to monitor, run and troubleshoot all of their networks, from on-premises to the cloud. Kentik’s network monitoring solution addresses all three pillars of modern network monitoring, delivering visibility into network flow, powerful synthetic testing capabilities, and Kentik NMS, the next-generation network performance monitoring system.


Kentik’s comprehensive network performance monitoring solution delivers:

- Deep Internet Insights: Enables visibility into the performance, uptime, and connectivity of widely used SaaS applications, clouds, and services.

- Intelligent Automation: Offers valuable insights without overwhelming users with unnecessary alerts.

- Comprehensive Data Understanding: Integrates SNMP, streaming telemetry, traffic flows, VPC logs, host agents, and synthetic monitoring for a holistic view of network performance.

- Multicloud Performance Monitoring: Monitors network traffic performance across hybrid and multicloud environments.

- Rapid Troubleshooting: Kentik’s network map visualizations enable swift issue isolation and resolution.

- Proactive Quality of Experience Monitoring: Optimizes application performance and detects potential issues in advance.

- Enhanced Collaboration Features: Promotes seamless coordination across network, cloud, and security teams through robust integrations.

- Advanced AI Features: Kentik AI allows NetOps professionals and non-experts alike to ask questions—and immediately get answers—about the current status or historical performance of their networks using natural language queries.

Related Network Performance Monitoring Topics

- Network Performance Monitoring Use Cases

- Network Performance Monitoring Metrics

- Cloud Network Performance Monitoring

- The Role of Network Observability in Modern Application Performance Monitoring

- Network Monitoring: Ensuring Optimal Network Health and Performance

- A Guide to Network Monitoring Protocols